Contemporary defense industries are quickly adopting artificial intelligence (AI) for strategic and operational purposes. From autonomous drones conducting independent targeting to decision-support tools acquiring vast quantities of battlefield data, AI is altering how wars are planned and fought. Armed forces in technologically advanced nations are embedding AI across critical functions in surveillance, logistics, cybersecurity, and command systems. Among the most debated innovations are lethal autonomous weapon systems (LAWS), forecasting targeting programs, and coordination tools powered by learning algorithms.[1] While these tools offer strategic benefits, they also raise ethical and societal concerns.

As the technology evolves, growing opposition is emerging to challenge militarized AI initiatives. Human rights groups, legal analysts, and advocacy organizations have raised issues over the loss of human judgment in life-and-death scenarios, the opacity of algorithmic decision paths, and the delegation of lethal authority to machines. This reaction is no longer confined to academic circles. It is spreading through media coverage, online forums, and national opinion surveys in democracies, where technological development must remain answerable to societal values.

Several factors help explain the roots of this growing unease. The lack of clarity in machine-driven decisions, the risks of error or bias, and the fear of autonomous weapons acting without meaningful human control are key issues. Incidents involving misfires from drone systems, unresolved questions raised in United Nations (UN) debates, and troubling outcomes from AI audits have all contributed to increased scrutiny. As the capability of the systems expands, so does the fear of surrendering moral responsibility to machines. This has triggered debates about legitimacy, both in terms of military operations and the democratic systems overseeing them. What’s at risk is not only future battlefield outcomes but also the foundational trust between civilian populations and their governments. Without responsive safeguards, the disconnect between AI development and public confidence could create challenges for maintaining defense credibility and public trust in democratic institutions.[2]

This insight explores the mounting tension between military innovation and public accountability in AI defense initiatives. It assesses the ethical challenges linked to autonomous systems and examines how governments are responding. Public attitudes are becoming influential in directing the future of AI in democratic contexts. As resistance builds, lawmakers are under pressure to ensure that technological progress aligns with societal expectations. Meeting this demand calls for integrating human-first principles into military AI frameworks, reinforcing the connection between defense policy and public legitimacy.

Ethical and Societal Concerns Surrounding Military AI

The introduction of AI within military frameworks has changed warfare dynamics, sparking ethical and civil dilemmas. Chief among them is the erosion of human involvement in critical decisions, where machines are allowed to determine matters of life and death, as in the case of LAWS.

Armed forces frequently deploy AI to improve detection, shorten reaction times, and reduce soldiers’ exposure to danger. However, such approaches also blur liability. If an autonomous platform errs, culpability becomes unclear, raising questions about both legal responsibility and ethical justification. This danger has manifested in unstable conflict zones such as Gaza and Ukraine, where system failures and classification errors resulted in significant civilian casualties.[3] A 2021 global Ipsos survey found that 55% of U.S. respondents opposed the use of fully autonomous weapons systems, citing concerns that such systems would be unaccountable.[4] This fear stems from documented occurrences. In Gaza, AI-assisted mechanisms were regarded as inaccurate and contributed to civilian fatalities, illustrating that theoretical accuracy does not necessarily translate into operational reliability or adherence to humanitarian standards.[5] The difficulty of attributing blame, whether to programmers, personnel, or the system itself, has amplified calls for regulatory measures in defense-related AI. This has been reflected in the U.S. Department of Defense’s (DoD) Inspector General audits of Project Maven’s targeting algorithms in 2022, which assessed compliance with ethical AI principles.[6]

The ongoing Israel-Gaza hostilities have seen several recent incidents involving military AI. Initially, Israel’s AI-augmented defense systems failed to detect and prevent Hamas’s early October attack, revealing a substantial intelligence failure,[7] comparable to the Israeli military’s misinterpretation of open-source satellite data during the 2006 Lebanon War, which resulted in missed signals of Hezbollah’s mobilization.[8] Subsequently, according to post-incident reports, AI-infused targeting platforms misclassified aid workers as hostile elements, similar to the 2021 U.S. drone strike in Kabul, where an aid worker delivering water was mistakenly flagged as an ISIS-K operative due to algorithmic pattern matching errors.[9] Noncombatant deaths have risen as digitized targeting has contributed to strikes on civilian infrastructure.[10] This demonstrates how automated systems struggle to distinguish between belligerents and unintended targets in asymmetric warfare. The Defense Innovation Board recognized this issue, emphasizing that AI must stay auditable and command-responsive, in line with protocols released by the U.S. DoD in 2020.[11]

Accountability dilemmas also stretch past combat scenarios. When unmanned systems malfunction or databases mislabel targets, liability disperses among engineers, handlers, leadership, and component producers. As machine learning advances toward real-time adaptation in military operations, addressing faults remains a contemporary issue. This constraint led to the establishment of legal structures, including the principles of armed conflict. Robert Sparrow argues that autonomous weapon systems lacking meaningful human consideration risk causing ‘moral de-skilling’ among operators, eroding their capacity for independent ethical judgement.[12] This intuition is mirrored in survey findings. Though most American institutions admit the functional efficiency of robotic technology, a majority suspect that such systems incentivize conflict and advocate for an international prohibition. These findings suggest that operational efficacy alone does not resolve public concerns about ethical acceptability. The Martens Clause echoes this notion, maintaining that martial conduct must align with communal ethics where regulations prove insufficient.[13] Public dissent thus carries legal weight as well as ideological significance.

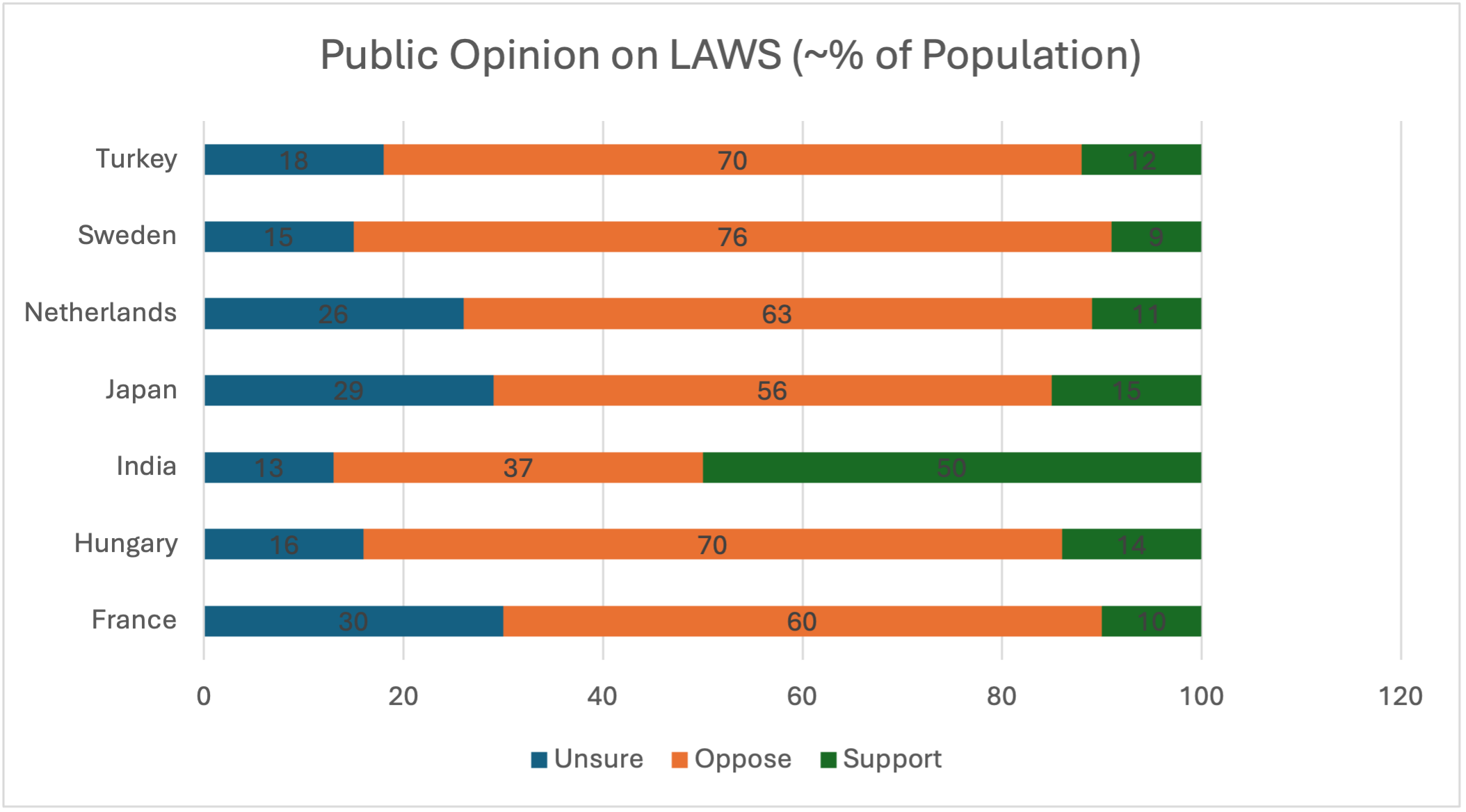

Figure 1: Public Opinion on LAWS

Source: Author’s creation using Microsoft SmartArt, adopting data from Ipsos Survey 2021

These rising concerns have drawn attention from advocacy groups, academic institutions, and relief agencies. The UN and the International Committee of the Red Cross (ICRC) have pressed for a legally binding global accord to restrict or govern LAWS by 2026.[14] Their stance is reinforced by criticism of the Group of Governmental Experts operating under the Convention on Certain Conventional Weapons (CCW), which has failed to produce a legal structure despite prolonged discussions.[15] Though the group has settled on eleven guiding principles, their lack of binding power remains an issue. Interpretations also differ notably among nations. Whereas China regards only systems that cannot be stopped as autonomous, France includes devices capable of choosing their own targets.[16] Such a discrepancy presents a substantial obstacle to any prospective worldwide treaty.

Public resistance to military AI centers on autonomous systems that perform lethal functions without reliable accountability measures. In 2023, the Stop Killer Robots campaign increased global pressure on state authorities, following disclosures on Israel’s Habsora, which utilized AI-guided targeting tools in Gaza.[17] This prompted renewed debate on the use of AI in military applications, with discussions focusing on risks linked to diverging algorithms. Reports suggested that these mechanisms produced quick strike capabilities with minimal human oversight, triggering widespread unease regarding AI supervision in conflict zones. Since 2013, 30 countries have explicitly called for a ban on fully autonomous weapons, stressing that machines must not be allowed to decide matters of life and death without meaningful human control.[18] Escalating disputes have led several lawmakers across European nations, especially in Germany and the Netherlands, to call for clearer accountability in defense AI research, revealing the intensifying conflict between tactical advantage and governance obligations.[19]

Popular opinion is beginning to reflect these moral apprehensions. Resistance to LAWS has shifted from the edges of policymaking to mainstream dialogue. Researchers have endorsed petitions, demonstrators have organized rallies, and public skepticism continues to rise. Polls indicate that while Americans endorse AI’s function in defense, only 29% approve of eliminating emotional considerations from combat.[20] This suggests that ethical evaluation must stay central to the armed forces’ due diligence measures, irrespective of technical feasibility. Such opinions cut across political affiliations and are shared by both public and military figures. Although trust is being built with AI-supported protection, there is still demand for retaining human aspects.

Numerous American and European administrations have already prohibited or limited facial recognition and automated tools in policing and immigration enforcement. Armed drones have emerged as a contentious issue. While not wholly self-directed, their shift toward enhanced independence has turned them into emblems of a scenario where battles are fought by machines alone. Skeptics contend that distant handlers may experience reduced empathy for targets, a detachment exacerbated where algorithms control engagements. In Gaza, multiple drone attacks in densely populated urban zones resulted in extensive civilian harm and raised questions over the operational effectiveness of AI-assisted combat.[21]

The deployment of autonomous weapons has triggered considerable ethical discourse, especially regarding Just War Theory, which stresses norms such as distinction, proportionality, and moral responsibility. Noel Sharkey points out that machines cannot interpret the subtle human cues necessary to distinguish civilians from combatants,[22] which raises doubts about the ability of autonomous weapons to comply with the jus in bello principle of distinction. Additionally, a UN analysis in 2024 reinforced these apprehensions, observing that the opacity of AI-based choices complicates legal attribution, leaving no identifiable individual liable for transgressions.[23] Human absence from essential determinations has fueled skepticism, especially in nations where moral and juridical expectations are regarded as fundamental to justified warfare. Consequently, advocacy groups insist that governments mandate strict human-centric rules to align the deployment of weapons with humanitarian law and ethical combat principles.

The question of lethal autonomy has shifted from an abstract debate to a core issue in assessing warfare, ethics, and global standards. Recent polls in the U.S. reveal widespread resistance to autonomous weapons, driven by unease about accountability, human dignity, and the spread of violence. The Martens Clause continues to serve as a vital legal doctrine, stressing that communal morals must guide military decisions when existing laws prove insufficient. Still, present structures have struggled to keep up. One possible remedy is Value-Based Engineering, a method integrating moral frameworks into technical creation from the start. It involves consulting varied actors early and appointing Value Leads to weave social concerns, including clarity, balance, and answerability, into systems.[24] Failure to embrace ethical factors risks widening the gap between technological strides and societal approval, eroding faith and stability.

Public Sentiment and Political Pressure in Democratic and Authoritarian States

The absorption of AI into defense differs greatly between democratic and autocratic nations, with implications for institutional reliability and mass acceptance. Democratic societies face growing scrutiny, judicial disputes, and transparency demands with autonomous weapons. Populations in Germany and the United States have criticized the lack of regulation while highlighting the potential unjust deployment of AI-guided weapons. At the same time, powers, including China and Russia, exploit these advancements to consolidate central government control, enhance anticipatory law enforcement, and optimize armed strategic planning. Although centralized implementation facilitates the rule of law, it indicates a high degree of centralized authority and limited public involvement. Democratic structures must also sustain communal approval by synthesizing defense interests with transparent ethical measures, a mounting challenge as AI capacities exceed regulatory adaptation.

German suspicion toward military AI stems from historical wariness of surveillance and authoritarian control. Bundeswehr efforts to adopt AI, mainly in reconnaissance and decision support, have drawn notable public attention. A 2024 cross‑national survey found that German respondents, like those in the U.S. and China, largely favor autonomous weapons less when informed they pose a higher risk of misidentifying targets, underscoring widespread anxiety about their accident‑prone nature.[25] Advocacy entities including the German Ethics Council and SWP demand enforceable protocols guaranteeing human control over military algorithms.[26] Lawmakers, pressured by this stance, advocate more transparent defense procurement and stronger adherence to the international law of armed conflict. Post-war constitutional safeguards prioritizing individual freedoms now frame AI in defense as a latent hazard if unchecked. Berlin’s imperative is to embrace innovation without sacrificing the democratic ideals essential to its identity.

In the U.S., merging AI with military functions poses fundamental tensions between the authority of the armed forces and individual protections. The Project Maven initiative and Joint Artificial Intelligence Center (JAIC) have refined AI-powered strikes, combat data interpretation, and decision support but remain limited in openness.[27] Rights organizations such as the American Civil Liberties Union warn of fading boundaries between military and civilian AI, as investigative tools crafted for anti-terror missions shift to domestic surveillance.[28] This tension drives bipartisan pressure for binding controls, including compulsory human judgment layers. The U.S. faces the dilemma of preserving its technological edge while upholding the democratic values that shape its system.

In China, the incorporation of AI into surveillance and defense mechanisms is propelled by government-driven efforts, including the Sharp Eyes initiative and extensive use of facial recognition in urban spaces.[29] Public expressions of concern are filtered by official moderation, while military AI ventures remain shielded from scrutiny under the pretext of national security and modernization aims. The People’s Liberation Army continues to embed AI within its command structures, incorporating automated situational awareness and logistics management with assistance from companies such as Baidu and iFlytek.[30]

In Russia, similar dynamics are present, though public sentiment is further constrained by mechanisms that restrict dissent. The Russian military’s AI research, with work undertaken by the Advanced Research Foundation, has focused on autonomous systems based on the Uran-9 and AI-enabled electronic warfare capabilities.[31] The 2022 amendments to Russia’s “foreign agents” law and the expanded role of the Federal Service for Supervision of Communications (Roskomnadzor) have limited the capacity of civil society to monitor or critique these developments.[32] Although some segments of the Russian public have raised concerns about surveillance and militarization, particularly after domestic deployments of facial recognition systems during political protests,[33] there is little institutional capacity to channel this discontent into policy influence. State-controlled media reinforces a narrative of technological resilience in the face of Western sanctions, further reducing the visibility of public skepticism.

The absence of formalized feedback structures in China and Russia facilitates the swift integration of military AI, although it also carries risks. With limited public scrutiny, issues when deploying autonomous systems may remain unaddressed, possibly weakening domestic stability. Furthermore, secretive decision processes complicate external evaluation and raise questions among observers about long-term reliability. Though public opinion might not generate direct political strain under such conditions, the accumulated consequences of unchecked technological expansion, especially in surveillance and coercion, could disrupt leadership unity unless effectively controlled.

The disparity in managing military AI between these political ideals extends beyond administrative distinctions, becoming a defining feature of international competition. Authoritarian states accelerate implementation with minimal civic or judicial constraints, whereas democracies grapple with tensions over openness, responsibility, and ethics. This asymmetry presents enduring strategic challenges. Democracies must now innovate within frameworks that preserve civil liberties while responding to external threats posed by unconstrained adversaries. However, authoritarian regimes face their own vulnerabilities. Although increasing the speed of preemptive defense, they also heighten the risk of strategic miscalculation, technical failure, and public backlash without institutional mechanisms for course correction.

Strategic Tradeoffs and the Future of Autonomous Warfare

The incorporation of AI into military activities originates from strategic needs prioritizing swiftness, precision, and minimizing human exposure to danger. While these advancements yield benefits, they also introduce new ethical and legal concerns, stimulating discussions on regulation, public responsibility, and apprehensions for an unchecked AI arms buildup. Achieving equilibrium between innovation and restraint requires a system assessing functional benefits alongside jurisdictional responsibilities, institutional checks, and collective attitudes toward algorithmic dominance.

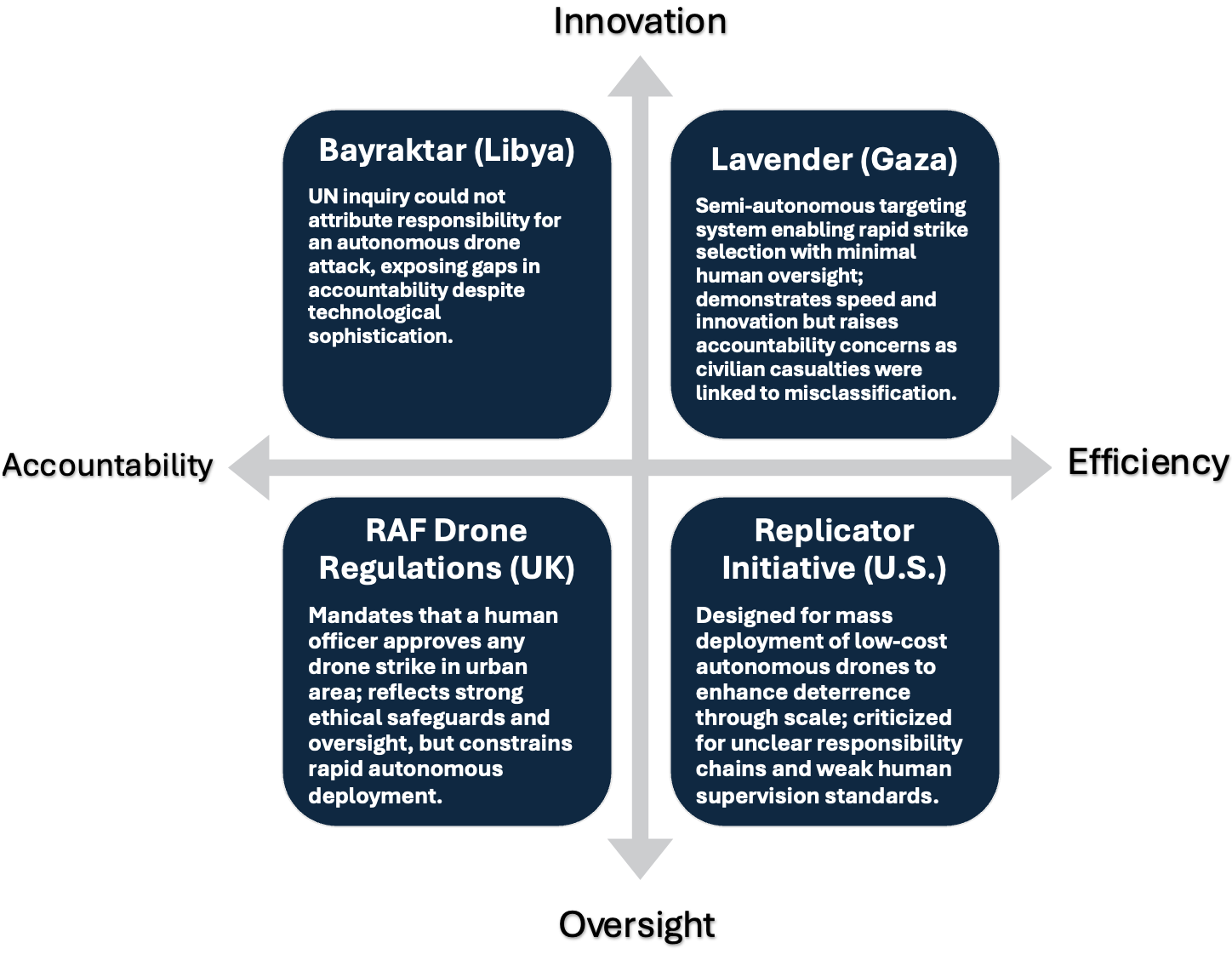

Tools such as Israel’s Lavender, allegedly enabling semi-autonomous target selection in Gaza, have heightened global concerns about delegating lethal choices to machines with limited human involvement.[34] In parallel, the Pentagon’s Replicator Initiative, aimed at the swift deployment of numerous self-directed drones, has faced challenges in its implementation in terms of standards and ill-defined chains of responsibility.[35] This is comparable to the 2019 Turkish Bayraktar drone strike in Libya, where UN investigators could not determine whether the operator, manufacturer, or foreign advisors bore ultimate responsibility.[36] As AI platforms start affecting critical combat determinations, the lack of openness and firm ethical guidelines weakens democratic credibility. To sustain public confidence and adhere to legal standards, armed forces must integrate protective measures guaranteeing that the human aspect remains central, as demonstrated by the UK Royal Air Force’s 2024 rules for drone operations, which require a human officer to approve any strike within urban areas before execution.[37] Neglecting this not only risks strategic missteps but also a gradual decline in human supervision and democratic moral standing.

Figure 2: Strategic Dilemmas in Autonomous Warfare

Author’s creation using Microsoft SmartArt

From a tactical perspective, autonomous machines sharpen effectiveness in contested areas, allowing agile responsiveness and prolonged engagement. However, higher efficiency prospects come with significant tradeoffs that strain democratic ideals. The Pentagon’s focus on automation via the Replicator plan, aiming to produce large quantities of cost-efficient autonomous systems, highlights the transition of militaries toward deterrent tools.[38] Yet weak policy constraints around human supervision protocols have drawn criticism from activists and defense officials. A 2025 NATO insight series report highlights how rushed implementation, lacking thorough examination, risks formalizing fully automated targeting, which may erode public trust and obscure liability in combat.[39] In addition, the European Parliament resolution of 12 September 2018 urgently called on EU Member States and the European Council “to develop and adopt … a common position on lethal autonomous weapon systems that ensures meaningful human control over the critical functions of weapon systems, including during deployment.”[40] Although armed forces leverage AI for strategic competition, maintaining credibility relies not only on technical prowess but also on a firm commitment to ethical boundaries, openness, and civil protection. Trading these precautions for short-term gains from autonomy can undermine societal consent and strategic balance.

A prominent ethical issue centers on the idea of meaningful human oversight. Surveillance technologies and decision-making aids tend to find broad acceptance, whereas fully autonomous mechanisms that initiate lethal action provoke disagreement. International humanitarian law underscores the necessity of human reasoning when applying standards such as distinction and proportionality, yet AI platforms rarely offer clear reasoning behind their conclusions, clouding responsibility. Legal problems emerge whenever AI wrongly assesses a danger and targets civilians. Military research in AI, frequently conducted in secrecy, has indirectly contributed to public estrangement and weakened trust. Greater transparency, input from communities, and awareness initiatives are key to realigning strategy and popular attitudes. Neglecting such approaches risks broadening the divide between technological progress and acceptance by society.

Efforts to regulate military AI remain fragmented. Although discussions have taken place within the UN framework, mainly under the CCW, a binding agreement has yet to be secured. Based on the CCW’s consensus-based approach, the UN General Assembly voted in 2024 to begin formal negotiations on a treaty emphasizing AI in warfare, with António Guterres advocating for concrete rules by 2026.[41] Other actions, like the U.S.-led Political Declaration on Responsible Military Use of AI and Autonomy, endorsed by over 30 nations, represent steps toward establishing guiding norms.[42] Yet, without enforcement, these efforts offer limited restraint.

Looking forward, the progression of self-sufficient combat presents challenging dilemmas regarding future military judgment, responsibility, and moral governance. As AI tools improve in independently recognizing, monitoring, and engaging threats, the likelihood of machines executing deadly measures with little or no human input becomes more conceivable. The Pentagon’s developing strategy on algorithmic combat and battlefield autonomy, illustrated in the JAIC project, reveals a move toward quicker, fragmented engagements. However, pace should not override discernment. In the absence of stringent standards and incorporated moral limitations, self-regulating platforms could react to unclear inputs erratically, particularly in high-pressure situations where every instant matters. Public apprehension is already rising; a 2023 Ipsos survey indicated 61% of international participants reject utilizing autonomous arms without human involvement.[43] Future legitimacy will rely on unambiguous restrictions protecting human influence, notably in critical lethal determinations. As geopolitical competitors accelerate the deployment of independent mechanisms, democratic nations confront a pivotal decision: whether to pioneer with moderation and systemic accountability or risk creating a gap between technological development and public acceptance.

Addressing these concerns calls for early moral anticipation and systematic accountability from conception. Integrating ethical functions directly within military-focused AI tools remains important. A well-designed structure helps to avoid scenarios where personnel depend too heavily on artificial assistance or delay intervention. Training armed forces to assess algorithmic dependability can improve judgment while preserving accountability. Cooperative action represents another essential element. Regional alliances can synchronize protective blueprints for AI, promoting shared norms. Knowledge exchange platforms can combine technical, strategic, and scholarly insights to sustain advancement without abandoning oversight. Collaborative endeavors and unified benchmarks help lessen inconsistency in governance.

Conclusion

The incorporation of artificial intelligence into military frameworks has created tactical benefits along with serious ethical complications. This insight illustrates that even though such technology permits swift decision-making, improved monitoring, and reduced troop vulnerability, it also weakens accountability, reduces human supervision in lethal decisions, and heightens civil distrust. Evidence from the conflicts in Gaza and Ukraine indicates that faulty classifications, system breakdowns, and opaque reasoning processes produce negative outcomes, intensifying disapproval from advocacy groups, government leaders, and global entities. In democratic societies, where public faith underpins legal authority, such pushback affects defense discussions, whereas authoritarian governments persist in advancing automated weaponization with minimal transparency.

Considering current military guidelines, technological innovation cannot progress separately from communal standards. Popular attitudes have shifted from marginal to crucial for upholding integrity in representative administrations. Armed forces’ reliance on artificial systems without ethical protections risks harming both functional lawfulness and governance. Governments should prioritize human-centric approaches throughout the development of military AI. This involves establishing oversight systems that can be enforced, maintaining clarity in how systems are used, and ensuring actions follow humanitarian regulations. Collaboration between nations, via formal agreements, engineering guided by ethical standards, and consistent methods for holding parties responsible will be crucial in addressing regulatory shortcomings. Including public input is necessary to ensure that advancements in defense reflect shared ideals instead of compromising them.

The trajectory of autonomous conflict will depend less on technical advancements and more on the willingness of nations to adhere to moral obligations. Reinforcing accountability and promoting confidence are vital for ensuring military AI supports rather than erodes the foundations of civil society.

[1] Charukeshi Bhatt and Tejas Bharadwaj, “Understanding the Global Debate on Lethal Autonomous Weapons Systems: An Indian Perspective,” Carnegie India, August 30, 2024, https://carnegieindia.org/2024/08/30/understanding-global-debate-on-lethal-autonomous-weapons-systems-indian-perspective.

[2] Raluca Csernatoni, “Can Democracy Survive the Disruptive Power of AI?,” Carnegie Europe, December 18, 2024, https://carnegieeurope.eu/2024/12/18/can-democracy-survive-disruptive-power-of-ai.

[3] “Does AI Really Reduce Casualties in War? ‘That’s Highly Questionable,’ Says Lauren Gould,” Utrecht University News, January 27, 2025, https://www.uu.nl/en/news/does-ai-really-reduce-casualties-in-war-thats-highly-questionable-says-lauren-gould.

[4] “Killer Robots: Survey Shows Opposition Remains Strong,” Human Rights Watch, February 2, 2021, https://www.hrw.org/news/2021/02/02/killer-robots-survey-shows-opposition-remains-strong.

[5] Noah Sylvia, “The Israel Defense Forces’ Use of AI in Gaza: A Case of Misplaced Purpose,” Royal United Services Institute (RUSI), July 4, 2024, https://rusi.org/explore-our-research/publications/commentary/israel-defense-forces-use-ai-gaza-case-misplaced-purpose.

[6] U.S. Department of Defense, Office of Inspector General, Evaluation of Contract Monitoring and Management for Project Maven, DODIG-2022-049, January 6, 2022 (publicly released January 10, 2022), https://www.dodig.mil/reports.html/Article/2890967/evaluation-of-contract-monitoring-and-management-for-project-maven-dodig-2022-049.

[7] Amr Yossef, “Cassandra’s Shadows: Analyzing the Intelligence Failures of October 7th,” Future for Advanced Research and Studies, May 28, 2025, https://futureuae.com/en-US/Mainpage/Item/10237/cassandras-shadows-analyzing-the-intelligence-failures-of-october-7th.

[8] Anthony H. Cordesman with George Sullivan and William D. Sullivan, Lessons of the 2006 Israeli-Hezbollah War, (Washington, DC: Center for Strategic and International Studies, 2007), https://csis-website-prod.s3.amazonaws.com/s3fs-public/legacy_files/files/publication/120720_Cordesman_LessonsIsraeliHezbollah.pdf.

[9] C. Todd Lopez, “DoD: August 29 Strike in Kabul ‘Tragic Mistake,’ Kills 10 Civilians,” U.S. Department of Defense News, September 17, 2021, https://www.defense.gov/News/News-Stories/Article/Article/2780257/dod-august-29-strike-in-kabul-tragic-mistake-kills-10-civilians/.

[10] Forrest E. Morgan, Benjamin Boudreaux, Andrew J. Lohn, Mark Ashby, Christian Curriden, Kelly Klima, and Derek Grossman, Military Applications of Artificial Intelligence: Ethical Concerns in an Uncertain World, (Santa Monica, CA: RAND Corporation, 2020), https://doi.org/10.7249/RR3139-1.

[11] U.S. Department of Defense, Responsible Artificial Intelligence Strategy and Implementation Pathway, prepared by the DoD Responsible AI Working Council in accordance with the memorandum issued by Deputy Secretary of Defense Kathleen Hicks on May 26, 2021, Implementing Responsible Artificial Intelligence in the Department of Defense (Washington, DC: U.S. Department of Defense, June 2022).

[12] Robert Sparrow, “Robots and Respect: Assessing the Case Against Autonomous Weapon Systems,” Ethics & International Affairs 30, no. 1 (2016): 93–116, https://doi.org/10.1017/S0892679415000647.

[13] Rob Sparrow, “Ethics as a Source of Law: The Martens Clause and Autonomous Weapons,” Humanitarian Law & Policy Blog (International Committee of the Red Cross), November 14, 2017, https://blogs.icrc.org/law-and-policy/2017/11/14/ethics-source-law-martens-clause-autonomous-weapons/.

[14] United Nations, “UN and Red Cross Call for Restrictions on Autonomous Weapon Systems to Protect Humanity,” UN News, October 5, 2023, https://news.un.org/en/story/2023/10/1141922.

[15] International Committee of the Red Cross, “Autonomous Weapons: ICRC Urges States to Launch Negotiations for New Legally Binding Rules,” International Committee of the Red Cross, June 5, 2023, https://www.icrc.org/en/document/statement-international-committee-red-cross-icrc-following-meeting-group-governmental.

[16] Jean-Baptiste Jeangène Vilmer, “A French Opinion on the Ethics of Autonomous Weapons,” War on the Rocks, June 2, 2021, https://warontherocks.com/2021/06/the-french-defense-ethics-committees-opinion-on-autonomous-weapons/.

[17] “Use of Automated Targeting System in Gaza,” Stop Killer Robots, December 1, 2023, https://www.stopkillerrobots.org/news/use-of-automated-targeting-system-in-gaza/.

[18] Human Rights Watch, Stopping Killer Robots: Country Positions on Banning Fully Autonomous Weapons and Retaining Human Control, August 10, 2020, https://www.hrw.org/report/2020/08/10/stopping-killer-robots/country-positions-banning-fully-autonomous-weapons-and.

[19] H. Akin Ünver, Artificial Intelligence (AI) and Human Rights: Using AI as a Weapon of Repression and Its Impact on Human Rights, in-depth analysis requested by the European Parliament’s Subcommittee on Human Rights (DROI) (Brussels: Policy Department, Directorate-General for External Policies, European Parliament, May 2024), https://www.europarl.europa.eu/RegData/etudes/IDAN/2024/754450/EXPO_IDA(2024)754450_EN.pdf.

[20] Forrest E. Morgan et al., Military Applications of Artificial Intelligence, 2020.

[21] Hanne Heszlein-Lossius, Yahya Al-Borno, Samar Shaqqoura, Nashwa Skaik, Lasse Melvaer Giil, and Mads F. Gilbert, “Traumatic Amputations Caused by Drone Attacks in the Local Population in Gaza: A Retrospective Cross-Sectional Study,” The Lancet Planetary Health 3, no. 1 (January 2019): e40–e47, https://doi.org/10.1016/S2542-5196(18)30265-1.

[22] Noel E. Sharkey, “The Evitability of Autonomous Robot Warfare,” International Review of the Red Cross 94, no. 886 (Summer 2012): 787–799, https://doi.org/10.1017/S1816383112000732.

[23] United Nations, Human Rights in the Administration of Justice: Report of the Secretary-General, A/79/296, General Assembly, Seventy-ninth session, August 7, 2024.

[24] Sarah Spiekermann, Value-Based Engineering: A Guide to Building Ethical Technology for Humanity (Berlin: De Gruyter, 2023), https://doi.org/10.1515/9783110793383.

[25] Ondřej Rosendorf, Michal Smetana, Marek Vranka, and Anja Dahlmann, Mind over Metal: Public Opinion on Autonomous Weapons in the United States, Brazil, Germany, and China (preprint, November 2024), https://doi.org/10.2139/ssrn.5021966.

[26] Axel Siegemund, “Digital Escalation Potential: How Does AI Operate at the Limits of Reason?” Ethics and Armed Forces, no. 1 (2024): 16–23, https://www.ethikundmilitaer.de/fileadmin/ethics_and_armed_forces/Ethics-and-Armed_Forces-2024-1.pdf.

[27] Billy Mitchell, “Google’s Departure from Project Maven Was a ‘Little Bit of a Canary in a Coal Mine,’” FedScoop, November 5, 2019, https://fedscoop.com/googles-departure-from-project-maven-was-a-little-bit-of-a-canary-in-a-coal-mine/.

[28] “ACLU Warns That Biden-Harris Administration Rules on AI in National Security Lack Key Protections,” ACLU, October 24, 2024, https://www.aclu.org/press-releases/aclu-warns-that-biden-harris-administration-rules-on-ai-in-national-security-lack-key-protections.

[29] Mateus de Oliveira Fornasier and Gustavo Silveira Borges, “The Chinese ‘Sharp Eyes’ System in the Era of Hyper Surveillance: Between State Use and Risks to Privacy,” Revista Brasileira de Políticas Públicas 13, no. 1 (2023): 439–453, https://doi.org/10.5102/rbpp.v13i1.7997.

[30] Jiayu Zhang, “China’s Military Employment of Artificial Intelligence and Its Security Implications,” International Affairs Review, August 16, 2023, https://www.iar-gwu.org/print-archive/blog-post-title-four-xgtap.

[31] Riley Simmons-Edler, Jean Dong, Paul Lushenko, Kanaka Rajan, and Ryan P. Badman, Military AI Needs Technically-Informed Regulation to Safeguard AI Research and Its Applications, arXiv, May 23, 2025, https://doi.org/10.48550/arXiv.2505.18371.

[32] Mariana Katzarova, Situation of Human Rights in the Russian Federation: Report of the Special Rapporteur on the Situation of Human Rights in the Russian Federation, Human Rights Council, Fifty-fourth session, Agenda item 4. A/HRC/54/54, United Nations General Assembly, September 18, 2023.

[33] Giulia Gabrielli, “The Use of Facial Recognition Technologies in the Context of Peaceful Protest: The Risk of Mass Surveillance Practices and the Implications for the Protection of Human Rights,” European Journal of Risk Regulation 16, no. 2 (2025): 514–541, https://doi.org/10.1017/err.2025.26.

[34] Bethan McKernan and Harry Davies, “‘The Machine Did It Coldly’: Israel Used AI to Identify 37,000 Hamas Targets,” The Guardian, April 3, 2024, https://www.theguardian.com/world/2024/apr/03/israel-gaza-ai-database-hamas-airstrikes.

[35] Joseph Clark, “DOD Innovation Official Discusses Progress on Replicator,” U.S. Department of Defense News, December 12, 2024, https://www.defense.gov/News/News-Stories/Article/Article/3919122/dod-innovation-official-discusses-progress-on-replicator/.

[36] Abdullah Bozkurt, “UN Experts Found Turkish Bayraktar Drones in Libya Were Easily Destroyed,” Nordic Monitor, February 21, 2022, https://nordicmonitor.com/2022/02/un-experts-found-turkish-bayraktar-drones-in-libya-were-easily-destroyed/.

[37] UK Ministry of Defence, The Strategic Defence Review 2025 – Making Britain Safer: Secure at Home, Strong Abroad, policy paper, updated July 8, 2025, GOV.UK, https://www.gov.uk/government/publications/the-strategic-defence-review-2025-making-britain-safer-secure-at-home-strong-abroad.

[38] “Why Replicator Is Critical for the Future of Defense,” Anduril Industries, December 19, 2023, https://www.anduril.com/article/why-replicator-is-critical-for-the-future-of-defense/.

[39] Roderick Parkes, Not Withstanding? An Upbeat Perspective on Societies’ Will to Fight, NDC Insight 5/2025 (Rome: NATO Defense College, July 2025), https://www.ndc.nato.int/wp-content/uploads/2025/07/2025_Insight-05.pdf.

[40] Ariel Conn, “European Parliament Passes Resolution Supporting a Ban on Killer Robots,” Future of Life Institute, September 14, 2018, https://futureoflife.org/ai/european-parliament-passes-resolution-supporting-a-ban-on-killer-robots/.

[41] United Nations, Lethal Autonomous Weapons Systems: Report of the Secretary-General, A/79/88, General Assembly, Seventy-ninth session, agenda item 98 (ss), July 1, 2024.

[42] Eugene van der Watt, “31 Countries Endorse US Guardrails for Military Use of AI,” DailyAI, November 10, 2023, https://dailyai.com/2023/11/31-countries-endorse-us-guardrails-for-military-use-of-ai/.

[43] “Global Survey Highlights Continued Opposition to Fully Autonomous Weapons,” Ipsos, February 2, 2021, https://www.ipsos.com/en-us/global-survey-highlights-continued-opposition-fully-autonomous-weapons.