Techno hubris, the dangerous overconfidence in technology’s ability to solve all problems, could lead to catastrophic societal consequences if left unchecked. Several examples illustrate the risks: The 2018 Cambridge Analytica scandal revealed how misuse of data analytics technology could manipulate and compromise the integrity of political systems, affecting millions of users worldwide. Similarly, the deployment of AI in criminal justice systems has shown how techno hubris can have dire consequences. AI algorithms, intended to predict criminal behaviour, have often perpetuated and even amplified existing biases, leading to unfair treatment and wrongful incarcerations. Economically, the misuse of automated systems in financial markets has led to significant losses. Indeed, the 2010 “Flash Crash” saw the U.S. stock market briefly lose nearly US$1 trillion in value within minutes due to automated trading algorithms. These systems were designed to maximize efficiency and profit, but their design lacked the ethical foresight needed to prevent market instability and protect investors from significant financial losses. The 2023 MOVEit Transfer data breach, impacting over 60 million individuals, highlights overconfidence in the software’s security capabilities and reveals the vulnerability of digital infrastructure to cyberattacks.[1] Additionally, the rise of AI-generated deepfakes has sparked significant controversy, with instances of these realistic fabrications being used to manipulate public opinion and defraud individuals.

Emerging technologies like quantum computing and blockchain also present new challenges. Quantum computing, with its potential to break current cryptographic methods, poses a significant threat to data security. Blockchain, while promising in its decentralized approach, could be susceptible to quantum attacks, necessitating the development of quantum-resistant cryptographic techniques. These examples underscore the necessity of “techno-wisdom”—a balanced approach that integrates ethical foresight with technological development. This article explores the urgent need to balance technological advancements with rigorous ethical, social, and environmental scrutiny to avert looming disasters.

Balancing Technological Innovation and Human Values

How can we navigate the intricate relationship between technological innovation and human values? This complex question demands a profound understanding of potential risks and ethical considerations.

Lewis Mumford, an influential American historian and philosopher of technology, warned about the dehumanizing aspects of large-scale technological systems in his analysis of the “megamachine”.[2] This concept is vividly illustrated in Charlie Chaplin’s 1936 film “Modern Times,” where the relentless pace of factory machinery reduces the protagonist to a mere cog in the machine. Mumford’s critique underscores the existential risk of losing sight of human and natural values in the relentless drive for efficiency. Building on this, Martin Heidegger, a renowned German philosopher, introduced the concept of “Enframing” (Gestell), which describes how technological advancement can shape human experience by viewing nature and human beings as resources to be optimized and controlled.[3] Heidegger’s insights expose the deep and transformative impact of technology on how we perceive the world, compelling us to confront the ethical consequences of viewing everything as mere tools for exploitation.

Figure 1: Charlie Chaplin in the Film ‘Modern Times’ (1936)[4]

Source: Britannica, https://www.britannica.com/topic/Modern-Times-film.

Jacques Ellul, a prominent French philosopher and sociologist, further elaborates on this by discussing “technique”—the set of efficient methods used in all areas of life.[5] He argued that ‘technique’ becomes an autonomous force that shapes society, often overshadowing human values and ethics. This perspective highlights the danger of allowing technological efficiency to dominate our decision-making processes, leading to a society where human welfare is secondary to technological progress. Langdon Winner, an American political theorist, emphasizes that technological artifacts embody specific forms of power and authority, which can shape social order in profound ways.[6] Winner’s critique of techno hubris illustrates how unchecked technological advancements can reinforce existing power structures and create new forms of inequality.

Najwa Aaraj, the Chief Executive Officer at the Technology Innovation Institute (TII) in Abu Dhabi, champions the integration of ethical considerations with technological advancements. Her work on the convergence of artificial intelligence (AI) and sustainability emphasizes the importance of addressing global challenges like climate change and resource depletion. By advocating for a balanced approach that harmonizes innovation with ethical foresight, Aaraj implicitly critiques the overconfidence in technology’s ability to solve all problems without considering broader societal impacts—a key aspect of techno-hubris.

These perspectives collectively advocate for a critical examination of the role of technology in society. They urge us to shift towards “techno-wisdom”, where technological innovation is harmonized with ethical, social, and environmental considerations, ensuring that human values are not overshadowed by the relentless pursuit of efficiency and control.

The Role of Tech Giants and How They Are Shaping Our Society

How do the world’s largest tech companies shape our society, and what are the risks of their unchecked power? In this contemporary landscape, monopolistic conglomerates such as Alphabet, Amazon, Apple, Meta, Samsung, Huawei, and IBM (to name a few) wield exceptional influence over global technological development and societal norms. These tech giants not only drive innovation but also weave themselves into the very fabric of our daily lives, transforming how we communicate, shop, work, learn, access healthcare, and even govern ourselves. Their reach extends beyond mere convenience, fundamentally reshaping our social structures, economies, and personal interactions.

Alphabet, the parent company of Google, exemplifies the double-edged nature of technological prowess. Google’s search engine processes over 3.5 billion searches per day, providing unparalleled access to information.[7] However, there is extensive public and government debate about data privacy and about whether search algorithms may promote certain viewpoints or products, which underscores the importance of oversight.[8] Similarly, Amazon’s vast e-commerce empire, which accounted for 38% of U.S. online retail sales in 2023, has transformed retail.[9] However, its labour practices and influence over market dynamics have prompted debate, both inside and outside the company, about worker rights and fair competition.

Apple and Meta exemplify the complexities of techno hubris. Apple’s ecosystem of devices and services has set remarkable standards for innovation and user experience, including data privacy, with over 1.5 billion active devices reported worldwide in 2023. However, its stringent control over the App Store and proprietary technologies raises significant antitrust concerns. Meta’s social media platforms, while connecting billions globally, have been implicated in spreading misinformation, violating user privacy, and influencing political outcomes, notably in the Cambridge Analytica scandal affecting 87 million users.[10] A study by the University of Southern California further revealed that the structure of social media platforms, which rewards frequent sharing, significantly contributes to the spread of misinformation. This misinformation can have profound societal impacts, including undermining public trust and influencing elections.[11]

Elon Musk’s influence on blockchain and alleged stock market manipulation further exemplifies the risks of unchecked technological power. Musk has had a significant impact on the cryptocurrency market, particularly through his tweets, causing substantial price fluctuations in cryptocurrencies like Bitcoin and Dogecoin. For instance, a single tweet from Musk can lead to a significant increase in trading volume and price, demonstrating the outsized influence he wields over the market. This influence underscores the volatility and susceptibility of the cryptocurrency market to external factors, highlighting the need for regulatory oversight to ensure market stability and protect investors.

Musk’s influence extends beyond cryptocurrencies to the stock market. His tweets have been known to cause significant movements in stock prices, sometimes leading to accusations of market manipulation. For example, his 2018 tweet about taking Tesla private at US$420 per share led to a substantial increase in Tesla’s stock price and subsequent legal action by the U.S. Securities and Exchange Commission (SEC) for allegedly misleading investors. This incident illustrates the potential for influential figures to disrupt financial markets and the importance of regulatory frameworks to prevent market manipulation and protect investors.

These examples reinforce the case for “techno-wisdom”. As these tech giants continue to expand their reach, it is imperative to establish robust regulatory frameworks that promote transparency, accountability, and the equitable distribution of technological benefits. Only by addressing the ethical, social, and environmental implications of their innovations can we harness the full potential of technology while safeguarding human values and societal well-being.

Can Stakeholders Navigate the Perils of Techno Hubris?

How do we navigate the perils of techno hubris, where innovation outpaces wisdom? Stakeholders play a pivotal role in regulating technology and advocating responsible innovation. Policymakers are at the heart of this effort, crafting regulations that balance innovation with ethical considerations. For instance, the European Union’s (EU) AI Act, which came into force in August 2024, introduces a comprehensive legal framework to promote a human-centric approach to AI.[12] This legislation addresses potential risks to citizens’ health, safety, and fundamental rights, setting clear requirements for AI developers and for those who deploy and use these systems, and it will have far-reaching consequences beyond the EU. Similarly, in the U.S., the National Artificial Intelligence Initiative Act of 2020 established advisory committees and interagency bodies to support AI policy development and ensure ethical AI deployment.[13]

In the UAE, the National Artificial Intelligence Strategy 2031 aims to position the country as a global leader in AI by fostering innovation while ensuring ethical standards are met.[14] The UAE has also established the UAE Council for Artificial Intelligence and Digital Transactions to oversee AI integration and promote responsible use.[15] In Saudi Arabia, the Saudi Data and Artificial Intelligence Authority (SDAIA) plays a crucial role in developing AI policies and frameworks that align with the country’s Vision 2030 goals.[16] These legislative and advisory bodies exemplify global and Middle Eastern efforts to regulate AI and support policymakers in promoting responsible innovation.

Technologists, however, often express concerns that excessive regulation might stifle innovation. They argue that stringent regulations can create barriers to entry, slow down the pace of technological advancements, and increase compliance costs. A study by MIT Sloan found that firms are less likely to innovate if increasing their headcount leads to additional regulatory oversight.[17] As John Van Reenen, a digital fellow at the MIT Initiative on the Digital Economy, notes, “The prospect of regulatory costs discourages innovation.”[18] Balancing regulation with the need to foster a dynamic and innovative tech environment remains a critical challenge for policymakers worldwide.

Technologists bear the responsibility of integrating ethical frameworks into the development and deployment of new technologies. They must be vigilant about the potential biases and unintended consequences of their innovations. For instance, the deployment of AI in criminal justice systems has sometimes perpetuated biases, leading to unfair treatment and wrongful incarcerations. A study by ProPublica revealed that an AI system used in the U.S. to predict criminal behaviour was biased against African Americans, demonstrating the critical need for ethical design and continuous monitoring.[19] Technologists should advocate for and adhere to ethical standards that prioritize human welfare and societal good over mere technical efficiency.

Corporate responsibility also plays a crucial role in promoting ethical AI. Tech companies like Google and Microsoft have taken significant steps to self-regulate and ensure their AI technologies are developed and deployed responsibly. Google’s AI Principles, established in 2018, outline commitments to avoid creating or reinforcing bias and uphold high standards of scientific excellence. Similarly, Microsoft’s Responsible AI Standard provides actionable guidance for building AI systems that are fair, reliable, and safe, emphasizing transparency and accountability. IBM, Meta, Amazon, and Apple have similar initiatives, highlighting the industry’s recognition of the need for ethical oversight and the proactive measures being taken to address potential risks. However, the actual implementation and impact of these initiatives vary. It is essential for these tech giants to move beyond setting guidelines and ensure that these principles are effectively integrated into their operations and decision-making processes.

Public engagement is equally essential in fostering a culture of responsible innovation. Raising public awareness about the implications of new technologies can empower individuals to make informed decisions and participate in meaningful dialogues about technological governance. For example, initiatives like the “AI for Good” Global Summit by the United Nations (UN) bring together diverse stakeholders to discuss the ethical and societal impacts of AI.[20] Engaging the public helps ensure that technological advancements align with societal values and needs. This collective approach, involving policymakers, technologists, and the public, is vital for achieving “techno-wisdom”—a balanced integration of technological progress with ethical, social, and environmental considerations.

Future Outlook

The chasm between our capabilities and ethical frameworks is set to widen as we forge ahead with unprecedented technological innovations. This growing disparity poses significant risks, particularly the potential misuse of powerful technologies like AI and quantum computing. The future is marked by heightened debates and intensified regulatory efforts aimed at bridging this critical gap.

Without proper oversight, AI could significantly worsen social inequalities and lead to a wide range of unintended consequences. Nick Bostrom, a prominent Swedish philosopher and an influential author in the field of AI, highlights the existential risks posed by superintelligent AI, which could potentially surpass human control and decision-making capabilities.[21] Likewise, Luciano Floridi, a Professor of Philosophy and Ethics of Information at the University of Oxford, explores how the digital revolution is rapidly transforming human reality, outpacing our ability to manage its profound impacts responsibly.[22] These impacts include current challenges such as data privacy breaches, the spread of misinformation, and the erosion of personal autonomy in the face of pervasive digital surveillance.

Further studies highlight specific ethical concerns. Steven Umbrello, a researcher at the Institute for Ethics and Emerging Technologies (IEET) affiliated with the University of Massachusetts Boston, emphasizes the potential for quantum computing to disrupt current encryption methods, posing significant privacy and security risks.[23] Luca M. Possati, a scholar at Delft University of Technology, stresses how quantum technologies introduce new ethical dilemmas, such as the quantum divide, where access to advanced quantum technologies could create significant disparities between different populations.[24] Additionally, the ethical implications of AI and quantum computing, including issues around data privacy and security, are increasingly recognized as critical areas for responsible development and deployment.

These insights highlight the necessity of proactive measures to ensure that technological advancements are beneficial and equitable. The literature suggests that interdisciplinary collaboration and robust ethical guidelines are essential to navigate the complexities of emerging technologies.

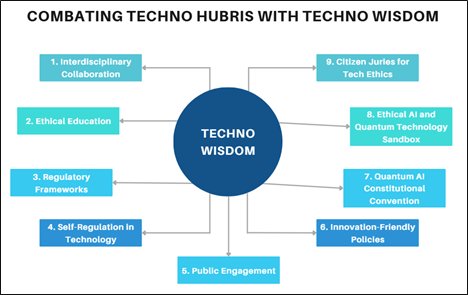

Figure 2: Combating Techno Hubris with Techno Wisdom

Source: The Author

Recommendations for Combating Techno Hubris with Techno Wisdom

- Interdisciplinary Collaboration: Encourage partnerships between technologists, ethicists, and policymakers to create comprehensive frameworks that address the ethical implications of new technologies. This approach can help anticipate and mitigate potential risks before they become unmanageable. For example, the European Union’s AI Act aims to regulate AI technologies while promoting innovation by classifying AI applications based on their risk levels. Similarly, Singapore has established the Advisory Council on the Ethical Use of AI and Data to guide the responsible development and deployment of AI technologies.

- Ethical Education: Integrate AI ethics into STEM education to prepare future innovators to consider the broader impacts of their work. This can foster a culture of responsibility and foresight in technological development. Countries like Germany have already implemented educational programs that emphasize ethical considerations in technology development. Additionally, the UK has introduced AI ethics modules in university curricula to ensure that future developers are well-versed in ethical standards.

- Regulatory Frameworks: Develop and enforce regulations that ensure transparency, accountability, and fairness in the deployment of new technologies. This includes creating standards for data privacy, AI ethics, and quantum computing safety. The U.S., for instance, has enacted the National Quantum Initiative Act to promote quantum information science and technology while ensuring ethical standards are maintained. Similarly, Japan’s Quantum Technology Innovation Strategy outlines ethical guidelines alongside significant funding for quantum research.

- Self-Regulation in Technology: Self-regulation is a critical component in the ethical deployment of emerging technologies. It involves industries setting their own standards and guidelines to ensure responsible innovation without waiting for external regulatory bodies to impose rules. Successful examples include Google’s AI Principles and the Partnership on AI, which demonstrate how self-regulation can complement formal regulations. However, self-regulation must be paired with external oversight to ensure consistency and accountability across the industry. By integrating self-regulation within regulatory frameworks, we can create a layered approach to governance that combines flexibility with oversight.

- Public Engagement: Promote public awareness and dialogue about the implications of emerging technologies. Informed citizens can advocate for ethical standards and hold developers and policymakers accountable. Canada’s approach to quantum computing includes public consultations and transparent communication strategies to ensure societal benefits are maximized without compromising ethical standards. Additionally, Australia has launched the AI Ethics Framework to engage the public and ensure that AI development aligns with societal values.

- Innovation-Friendly Policies: Implement policies that balance regulation with the need to foster innovation. Japan’s Quantum Technology Innovation Strategy allocates significant funding to quantum research while establishing ethical guidelines to ensure responsible development. This strategy aims to advance technological capabilities without stifling innovation. Similarly, South Korea has introduced the AI National Strategy, which promotes AI development through substantial investments while ensuring ethical considerations are integrated into the innovation process.

- Quantum AI Constitutional Convention: Organize a global convention to establish a “Quantum AI Constitution” that sets clear ethical guidelines and protocols for the deployment of AI and quantum technologies. This convention would bring together international stakeholders to create a comprehensive framework akin to a digital Magna Carta, ensuring that ethical standards are universally adopted and enforced.

- Ethical AI and Quantum Technology Sandbox: Create regulatory sandboxes where companies can test new AI and quantum technologies in a controlled environment. These sandboxes would allow for experimentation and innovation while ensuring that ethical guidelines are strictly followed. The UK’s Financial Conduct Authority has successfully implemented such sandboxes for fintech innovations, providing a model that could be adapted for AI and quantum technologies.

- Citizen Juries for Tech Ethics: Establish citizen juries composed of diverse members of the public to deliberate on the ethical implications of new technologies. These juries would provide recommendations to policymakers and technologists, ensuring that societal values and concerns are integrated into the development process. This approach has been used in various countries to address complex policy issues and could be adapted for technology ethics.

In sum, while the future of technology brims with enormous potential, it demands a harmonious approach that integrates ethical considerations at every stage of innovation. By nurturing collaboration, education, regulation, public engagement, and innovation-friendly policies, we can ensure that our technological advancements enrich society.

Endnotes

[1] Carly Page, “MOVEit, the biggest hack of the year, by the numbers,” TechCrunch, August 25, 2023, https://techcrunch.com/2023/08/25/moveit-mass-hack-by-the-numbers/.

[2] Lewis Mumford, The Myth of the Machine: Technics and Human Development, (Harcourt, Brace & World, 1967).

[3] Martin Heidegger, The Question Concerning Technology, (Germany: Garland Publishing, 1954).

[4] Britannica, https://www.britannica.com/topic/Modern-Times-film.

[5] Jacques Ellul, The Technological Society, (Toronto: Alfred A Knopf, Inc. and Random House, Inc., 1964), https://archive.org/details/JacquesEllulTheTechnologicalSociety/page/n1/mode/2up.

[6] Langdon Winner, The Whale and the Reactor: A Search for Limits in an Age of High Technology, (Chicago and London: University of Chicago Press, 2020).

[7] In January 2024, Google held a staggering 91.5% of the global search engine market, far surpassing its competitors like Bing (3.5%) and Yandex (1.6%). Maria Webb, “100+ Google Search Statistics You Need to Know in 2024,” Techopedia, January 5, 2024, https://www.techopedia.com/google-search-statistics.

[8] Thomas Germain, “Google just updated its algorithm. The Internet will never be the same,” BBC, May 25, 2024, https://www.bbc.com/future/article/20240524-how-googles-new-algorithm-will-shape-your-internet.

[9] Stephanie Chevalier, “Market share of leading retail e-commerce companies in the United States in 2023,” Statista, May 22, 2024, https://www.statista.com/statistics/274255/market-share-of-the-leading-retailers-in-us-e-commerce/.

[10] Shiona McCallum, “Meta settles Cambridge Analytica scandal case for $725m,” BBC, December 23, 2022, https://www.bbc.com/news/technology-64075067.

[11] Kate Starbird, “I’ve been studying misinformation for a decade — here are the rumours to watch out for on US election day,” Nature, October 24, 2024, https://www.nature.com/articles/d41586-024-03401-6.

[12] European Union, “Artificial Intelligence Act. REGULATION (EU) 2024/1689 OF THE EUROPEAN PARLIAMENT AND OF THE COUNCIL,” Official Journal of the European Union, June 13, 2024, https://eur-lex.europa.eu/eli/reg/2024/1689/oj.

[13] Congress.gov, “H.R.6216 – National Artificial Intelligence Initiative Act of 2020,” March 12, 2020, https://www.congress.gov/bill/116th-congress/house-bill/6216.

[14] United Arab Emirates Minister of State for Artificial Intelligence Office, “UAE National Strategy for Artificial Intelligence 2031,” National Programme for Artificial Intelligence, 2018, https://ai.gov.ae/wp-content/uploads/2021/07/UAE-National-Strategy-for-Artificial-Intelligence-2031.pdf.

[15] UAE Government portal, “Artificial intelligence in government policies,” September 9, 2024, https://u.ae/en/about-the-uae/digital-uae/digital-technology/artificial-intelligence/artificial-intelligence-in-government-policies.

[16] Saudi Data and AI Authority, “Leading the Kingdom Toward Partnership,” https://sdaia.gov.sa/en/default.aspx.

[17] Betsy Vereckey, “Does regulation hurt innovation? This study says yes,” MIT Sloan School of Management, June 7, 2023, https://mitsloan.mit.edu/ideas-made-to-matter/does-regulation-hurt-innovation-study-says-yes.

[18] Ibid.

[19] Julia Angwin, Jeff Larson, Surya Mattu, and Lauren Kirchner, “Machine Bias: There’s software used across the country to predict future criminals. And it’s biased against blacks,” ProPublica, May 23, 2016, https://www.propublica.org/article/machine-bias-risk-assessments-in-criminal-sentencing.

[20] AI for Good, “AI for Good Global Summit,” Geneva, Switzerland, 2024, https://aiforgood.itu.int/summit24/.

[21] Nick Bostrom, Superintelligence: Paths, Dangers, Strategies, (Oxford University Press, 2014), https://fdslive.oup.com/www.oup.com/academic/pdf/13/9780198739838.pdf.

[22] Luciano Floridi, The Fourth Revolution: How the Infosphere is Reshaping Human Reality, (Oxford University Press, 2014).

[23] Steven Umbrello, “Ethics of Quantum Technologies: A Scoping Review,” International Journal of Applied Philosophy 37, no. 2 (Fall 2023): 179-205, https://doi.org/10.5840/ijap202448201.

[24] Luca M. Possati, “Ethics of Quantum Computing: an Outline,” Philosophy & Technology 36 (2023): 1-21, https://link.springer.com/article/10.1007/s13347-023-00651-6.