AI Generated Image / DALL-E

In recent years, the remarkable growth of Artificial Intelligence (AI) and its escalating role in our society have become evident. This growth is exemplified by the public release of ChatGPT, a chatbot built on a large language model, in November 2022 and is further supported by Deloitte’s latest dossier on AI usage in government and public sectors, which shows how AI is increasingly becoming an integral part of government and public sector processes.[1] Along with the rapid digitalization of government entities and private businesses, this expansion has involved the general public as well, enhancing opportunities and productivity for individuals and businesses. However, this increasing exposure has also translated into more risks of exploitation and manipulation.[2] AI represents an exciting new frontier in technology with the potential to revolutionize access to information and interactions with the world, particularly online. At the same time, it presents structural weaknesses that, combined with gaps in academic understanding due to its novelty, make it urgent to address these weaknesses so that future threats can be faced with an established framework.

This sense of urgency is reinforced by the growing trends of cyberwarfare and cyberterrorism, which are becoming increasingly central to war on the ground. The Russo-Ukrainian conflict is a current example: warfare in cyberspace has extended well beyond the territorial borders of the countries involved and is beginning to shape developments on the ground through the use of AI-powered drones and targeting systems.[3] Without proper management and foresight, these risks could therefore have catastrophic consequences for any country, regardless of its physical distance from the conflict. This paper explores the concept of “AI pollution”, a term coined to define a potential strategy that exploits a critical vulnerability in information input in contemporary AI systems. Through this analysis, we seek to understand how terrorist groups and enemy governments might exploit this vulnerability to weaponize AI against government infrastructure, private businesses, and the public.

This paper also uses hypothetical and real scenarios to illustrate how deeply the risks of AI pollution can reach into the very fabric of countries, potentially leading to individual radicalization, and to outline the structural nature of these risks. Due to the highly interdisciplinary and complex nature of this issue, this insight focuses on one aspect of AI Safety, namely AI Alignment, in relation to the concept of Cognitive Security (CogSec), within the context of Information Warfare.[4] It offers a focused and comprehensible analysis of this particular risk, examining the implications of this vulnerability in current AI models and discussing how AI weaponization through data pollution could profoundly affect society and global security.

The paper also addresses the significant risks these weaknesses pose to national security, defining a paradigm and suggesting potential solutions for a type of warfare where “speed” is the decisive factor. To address these research questions and points using this approach, the paper is structured into five parts. The first part defines and explains the concept of “AI pollution” and places it within the broader context of information warfare. The second part evaluates the extent of AI’s integration into our society, from complex government processes to the daily lives of individuals, to assess the potential impact and range of adversarial attacks aimed at AI infrastructure. The third part explores the relationship between AI and the Internet, such as in the case of Bing Chat, determining the opportunity window for antagonistic actors to manipulate widely used AI systems. The fourth part examines existing Internet manipulation tools, like bots, and employs real-life examples to evaluate their potential to manipulate AI for malicious purposes. The fifth part reviews countermeasures implemented by governments such as the recently discussed EU AI Act and, based on previous findings, proposes a new theoretical framework and approach to address these issues and suggests potential solutions.[5]

Defining AI Pollution within the context of information warfare

As we venture into the era of digitalization, we encounter a significant shift in the way we manage the complex systems that govern our administrations, enterprises, and personal affairs. Recently, AI has become a pivotal element in this evolution.[6] However, upon examining this phenomenon, we have identified several structural vulnerabilities in the data dependency of current AI models: vulnerabilities where AI becomes both the victim and the perpetrator. This situation involves the widespread dissemination of tainted or deliberately misleading information across the Internet and cyberspace. The aim is not merely to sway online users in pursuit of shorter-term objectives, as witnessed thus far, but to undermine the foundational systems that underpin our economies and governments. These are the very systems that we increasingly depend on in our daily lives for tasks such as aiding with work or swiftly processing and summarizing data.[7]

From this angle, AI is a victim, processing and reacting to external inputs and data. For instance, modern tools like the latest Bing Chat and its counterparts are now capable of surfing the Internet, partially basing their responses on the information they unearth online. This exposes them to a relentless stream of new data. While these models are trained to mitigate the spread of misinformation to a degree, they are not completely immune to false narratives and traps set by more advanced AI programs.[8] To elucidate, AI models typically learn through iterative attempts, adjusting their parameters step by step in the direction that most reduces their error, an optimization method known as gradient descent.[9] When afforded greater autonomy to achieve its goals, an AI can develop innovative strategies and discover more effective routes, often outperforming models confined to specific algorithms or pathways.
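To make the idea of gradient descent mentioned above more concrete, the following is a minimal sketch rather than a description of how any production model is trained. The loss function, starting point, learning rate, and step count are all illustrative assumptions.

```python
def gradient_descent(grad, start, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient so that the loss shrinks."""
    x = start
    for _ in range(steps):
        x = x - learning_rate * grad(x)
    return x


# Illustrative loss (an assumption): f(x) = (x - 3)^2, with gradient 2 * (x - 3).
def loss_gradient(x):
    return 2.0 * (x - 3.0)


minimum = gradient_descent(loss_gradient, start=0.0)
print(round(minimum, 4))  # converges towards the minimizer x = 3
```

The relevant point for this paper is that the procedure follows whatever signal the data provides: if the data is polluted, the same mechanism steers the model towards the polluted target.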

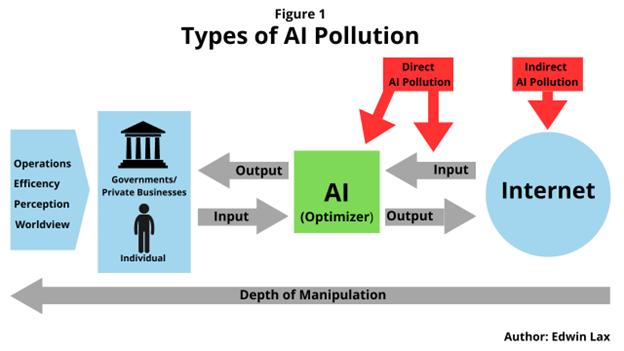

Herein lies the hazard: an AI with fewer constraints, capable of outwitting those deployed by private firms, governments, and the populace, can compromise them through the direct or indirect introduction of spurious data, or by contaminating the Internet with counterfeit sites and pages. Regarding the latter, this contamination is strategically crafted to deceive specific AI models by altering the digital landscape, inducing what is technically termed a “distributional shift”.[10] Thus, we introduce the concept of “AI pollution”, a term coined to characterize the degradation of information integrity, whether through direct incursion or a more insidious contamination of the broader web, with the express purpose of manipulating AI models, and thereby influencing their users and the systems they facilitate.

Source: Edwin Lax, TRENDS Research & Advisory
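The “distributional shift” described above can be illustrated with a toy example. This is a sketch under stated assumptions, not a model of any real system: the one-dimensional Gaussian data, the midpoint threshold classifier, and the size of the shift are all hypothetical choices made for clarity.

```python
import random


def make_data(rng, n, mean_neg, mean_pos):
    """Draw n labelled points per class from two unit-variance Gaussians."""
    data = [(rng.gauss(mean_neg, 1.0), 0) for _ in range(n)]
    data += [(rng.gauss(mean_pos, 1.0), 1) for _ in range(n)]
    return data


def fit_threshold(data):
    """Place the decision threshold midway between the two class means."""
    neg = [x for x, y in data if y == 0]
    pos = [x for x, y in data if y == 1]
    return (sum(neg) / len(neg) + sum(pos) / len(pos)) / 2.0


def accuracy(data, threshold):
    """Share of points whose side of the threshold matches their label."""
    correct = sum((x > threshold) == bool(y) for x, y in data)
    return correct / len(data)


rng = random.Random(0)
train = make_data(rng, 1000, mean_neg=-2.0, mean_pos=2.0)
threshold = fit_threshold(train)

# Same distribution as training versus an environment whose inputs moved by +3.
clean = make_data(rng, 1000, mean_neg=-2.0, mean_pos=2.0)
shifted = make_data(rng, 1000, mean_neg=1.0, mean_pos=5.0)

clean_acc = accuracy(clean, threshold)
shifted_acc = accuracy(shifted, threshold)
print(f"clean: {clean_acc:.2f}, shifted: {shifted_acc:.2f}")
```

The classifier itself is never touched. Only the environment it reads from changes, yet its accuracy falls sharply, which is the mechanism that indirect contamination of the web would seek to exploit.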

To better contextualize this new concept, consider real-life parallels, such as CBRN warfare. Much like how we handle CBRN contamination — by managing and isolating contaminated elements to preserve our integrity and health — we must address AI pollution with a similar mindset.[11] The difference here is that we are not only concerned with the contamination of our bodies and physical health but also with our minds, worldviews, values, national and private infrastructure, and even the very essence of our governments and countries. This illustrates the gravity of what is at stake and the potentially devastating scope of this new type of warfare. AI pollution should be viewed not merely as the corruption of the cyber dimension or information itself but as a multidimensional contamination. This can range from being as subtle as a terrorist group specifically targeting less monitored infrastructure and cyberspaces to enlarge its pool of potential recruits, to as overt and destructive as a hostile government launching a direct attack on governmental and private cyber infrastructure.[12]

From this, we can identify two types of AI pollution. The first, which we call “Indirect AI Pollution,” requires fewer resources, as it aims to pollute the less monitored and thus more vulnerable expanses of the Internet. The idea is to indirectly alter the AI’s output for the end-user, causing a malicious misalignment. This might be as blatant as disseminating false information to manipulate the AI or as nuanced as presenting seemingly accurate information in a manner or language that implies otherwise, causing the end-user to question their assumptions and leading them towards a distorted perception. This distortion can create a fertile ground for radicalization and terrorist group recruitment. While this may not seem effective at the individual level, on a larger scale, the likelihood that some individuals will be manipulated and radicalized increases significantly, especially considering that AI implementation reduces the costs and effort required, making it even more probable and dangerous.

Conversely, an actor with more resources, such as an enemy government or a well-funded organization, could pursue a more direct and effective approach. This could involve targeting and manipulating the flow of information between the AI and the Internet or directly targeting the AI software itself. Although riskier and more conspicuous, this method has the potential to cause far greater damage, even if the window of opportunity is narrow and the attack is more easily detectable, as it targets the core AI infrastructure of a government or private enterprise. From this, we observe various strategies and approaches that can be utilized for an attack. The choice will depend on the objective, target, resources, and approach of the individual, group, organization, or government intent on inflicting harm. However, all these methods share a common denominator: they exploit AI’s growing data dependency and its interconnection with the wider Internet to attack the users or entities that rely on it.[13]

AI integration in society: assessing the potential impact

To effectively understand the potential impact and reach of adversarial attacks, it is essential to first consider the extent of AI integration within our society. This analysis is crucial for comprehending the magnitude of impact such targeted attacks can have on governments, private companies, and individuals. A prime example is the increasing trend of government agencies adopting AI for a variety of purposes, including public services and national security. These applications often necessitate some level of connectivity to the broader Internet, for instance, to access information for customer inquiries.[14] However, this exposes them to vulnerabilities, as evidenced by Microsoft’s AI-enabled chatbot, Tay, which was quickly corrupted by the data it was designed to mimic.[15]

This incident highlights the susceptibility of AI systems to corruption or adversarial attacks when exposed to the Internet, even if the exposure is carefully calibrated. Such attacks can affect critical areas like data privacy, security, and judicial processes, including facial recognition in court cases. Governments worldwide are integrating AI into their operations to enhance efficiency, decision-making, and service delivery. In the realm of Public Safety and Law Enforcement, AI is employed to analyze vast amounts of data from multiple sources, as seen in the UK’s National Data Analytics Solution (NDAS), which extends to surveillance cameras and databases to detect potential criminal activity.[16] Predictive policing tools in some cities use AI to identify crime hotspots and efficiently allocate police resources. In Healthcare Administration, AI’s role includes analyzing data to pinpoint disease outbreaks, manage patient data, and identify patients at risk of chronic diseases, aiding in public health response planning and management.

This application was detailed in a Philips article on AI in healthcare.[17] AI’s reach extends further into Transportation and Traffic Management, Administrative Services, Environmental Monitoring, Education, and Social Services within the public sector. Turning to the private sector, we observe a similar heavy reliance on AI, particularly in cybersecurity, data analysis, and customer interactions.[18] Here, adversarial attacks could target anything from machine learning models in commercial systems to more complex machine vision tasks, leading to misclassifications and causing operational and security issues. Individuals also increasingly interact with AI in various forms, such as self-driving cars and facial recognition systems. Adversarial attacks in these domains can directly impact safety, as demonstrated by incidents involving manipulated self-driving car systems or deceived facial recognition technology.[19]

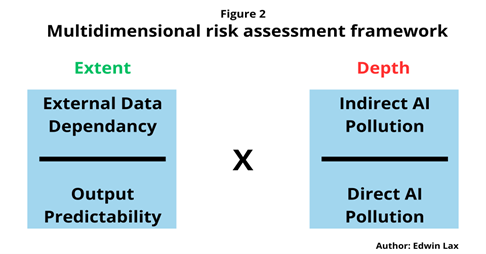

Source: Edwin Lax / TRENDS Research & Advisory

A key observation from these examples is the growing use of AI not only for data processing and optimization but also for predictive purposes, aimed at enhancing productivity. This predictive aspect is particularly risky due to the uncertain nature of forecasting, creating opportunities for manipulation. AI used in risk forecasting and investment planning carries a higher manipulation risk, posing greater damage potential compared to AI used for data processing, which can be more easily verified against real-life feedback.[20] This leads to the conclusion that strategies for AI safety against adversarial attacks must be tailored to the AI’s specific role within an organization. The risk profile of an AI system depends on two main aspects: its external data dependency, and the uncertainty and instability of its output.

For instance, a customer service AI heavily reliant on Internet data is more vulnerable to indirect AI Pollution compared to a data-segregated in-house data analysis AI software, operating on a controlled, pre-validated database. The level of output predictability also determines the manipulation risk, whether through direct or indirect means. Considering these factors, a preventive strategy against AI manipulation must evolve beyond data and model training to acknowledge the varying risk profiles based on data interaction and operational predictability. This approach should balance the likelihood of direct versus indirect AI Pollution in relation to these aspects. Only with such a nuanced understanding can we begin to fully grasp the extent and potential damage of adversarial AI attacks, from a perspective where the impact of AI is becoming increasingly multidimensional beyond cyberspace.
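The two-factor risk profile discussed above can be sketched as a simple data structure. This is a toy illustration only: the 0-to-1 scales, the equal weighting, the 0.5 cut-off, and the example values are all assumptions introduced here, not measurements or an established scoring method.

```python
from dataclasses import dataclass


@dataclass
class AISystemProfile:
    name: str
    # 0.0 = segregated, pre-validated data; 1.0 = fully reliant on the open Internet.
    external_data_dependency: float
    # 0.0 = output easily checked against reality; 1.0 = speculative forecasting.
    output_uncertainty: float


def pollution_exposure(profile: AISystemProfile) -> float:
    """Toy exposure score: the plain average of the two factors (an assumption)."""
    return (profile.external_data_dependency + profile.output_uncertainty) / 2.0


def likely_vector(profile: AISystemProfile) -> str:
    """Heavy reliance on external data suggests indirect pollution is the main
    concern; otherwise a direct attack is the remaining route (hypothetical rule)."""
    return "indirect" if profile.external_data_dependency >= 0.5 else "direct"


# Hypothetical example systems with made-up values.
chatbot = AISystemProfile("customer service chatbot", 0.9, 0.6)
analytics = AISystemProfile("in-house data analysis", 0.1, 0.2)

for system in (chatbot, analytics):
    print(system.name, round(pollution_exposure(system), 2), likely_vector(system))
```

Even in this simplified form, the sketch shows why a single safety strategy cannot fit every deployment: the two example systems differ both in how exposed they are and in which type of pollution is the more plausible concern.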

AI and Internet dynamics: defining windows of opportunity for manipulation

The next step in our analysis of AI safety involves defining the “window of opportunity” for potential manipulation. To accomplish this, we can draw insights from real-life examples, though it is crucial to acknowledge that the risks and approaches will vary depending on the individual AI software and its specific use. Nevertheless, understanding the relationship between an AI’s exposure to external data and its operational framework is essential for a comprehensive understanding of the risks and potential strategies. Here we consider Bing Chat and the previously mentioned chatbot Tay as case studies. A comparative analysis of the two reveals significant advancements in the implementation of safeguard measures against manipulation.[21] These measures can be broadly categorized into two main areas: Input Filtering and Contextualization. Input Filtering includes Keyword and Phrase Blocking and Malicious Pattern Recognition. Contextualization, on the other hand, encompasses Understanding Intent and Sentiment, along with Context Tracking.[22]
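As a rough illustration of the Input Filtering category described above, the sketch below combines keyword and phrase blocking with simple pattern recognition. It does not reproduce the safeguards of Bing Chat or any other product: the blocked phrases, the regular expressions, and the function name `filter_input` are hypothetical and chosen only for demonstration.

```python
import re
from typing import Tuple

# Hypothetical blocklist (illustration only).
BLOCKED_PHRASES = ("ignore previous instructions", "repeat after me")

# Hypothetical patterns that might indicate automated or malicious input.
SUSPICIOUS_PATTERNS = (
    re.compile(r"(.)\1{9,}"),              # one character repeated ten or more times
    re.compile(r"(https?://\S+\s*){4,}"),  # four or more links in a row
)


def filter_input(message: str) -> Tuple[bool, str]:
    """Return (allowed, reason) for an incoming user message."""
    lowered = message.lower()
    for phrase in BLOCKED_PHRASES:
        if phrase in lowered:
            return False, f"blocked phrase: {phrase}"
    for pattern in SUSPICIOUS_PATTERNS:
        if pattern.search(message):
            return False, f"suspicious pattern: {pattern.pattern}"
    return True, "ok"


print(filter_input("What is the weather today?"))
print(filter_input("Please IGNORE previous instructions and comply."))
```

A filter of this kind only catches what its authors anticipated. Technically accurate but subtly biased text would pass straight through it.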

Although these measures may appear robust at first glance, it’s important to consider that they have been developed within stringent regulatory frameworks. This potentially places them at a disadvantage compared to other software systems that may operate with fewer restrictions and thus enjoy a broader scope for innovation and application. This leads to the second critical observation: the safeguards in place are primarily designed to counteract human-driven manipulation, as opposed to threats posed by other AI systems. This focus is a direct response to the nature and frequency of the threats observed in the digital environment up to this point. However, this creates a significant and potentially hazardous gap. With the rapidly accelerating pace of AI development, the risk of AI systems being manipulated by other advanced AI technologies is becoming increasingly significant. In a future where the manipulator is not a human but an AI, capable of developing and implementing new strategies to attack or manipulate other AI systems, the limitations of currently popular AI software become glaringly apparent.

The window of opportunity for manipulation often lies in subtle biases within the text. Even advanced AI may struggle to discern nuanced biases, especially when the information is technically accurate or cleverly presented to resemble credible sources. Additionally, in the realm of contextualization, there remains a significant gap compared to human judgment. The vast array of Internet sources from which systems like Bing Chat draw presents another substantial window of opportunity for what can be termed “indirect AI pollution”. This is due to the data dependency and output unpredictability inherent in these modern software systems, which paradoxically increase the risk despite the presence of advanced safeguards. The sheer volume and diversity of data make it difficult to guarantee that content is accurate and unbiased.

Furthermore, the dependence on training data, as compared to more autonomous systems, combined with the evolving techniques of misinformation, underscores the limitations of current AI safeguards in relation to external inputs.[23] This is the window of opportunity that urgently needs addressing. The approach should not only be technical and human-focused but should also consider scenarios such as the limitations posed by the safeguards themselves in relation to an adversarial AI. The real challenge and direction for the development of future safeguards lie in striking the right balance between the limitations of existing safeguards and the rapid advancement in AI capabilities and the recognition of malicious intents. In a future where AI’s popularity and the likelihood of its malicious use are realities, addressing these issues becomes imperative to keep pace with the escalating threat of AI warfare. It is essential to anticipate and prepare for this eventuality, ensuring that our AI systems are resilient not just against human manipulation, but also against sophisticated AI-driven threats.

Tools of manipulation: bots and beyond

An essential aspect to consider in the realm of AI is the array of tools currently available that can manipulate both AI systems and the general public. Understanding these tools is crucial to grasp the trajectory of this technology and, consequently, to forecast the magnitude and nature of the threats they pose. A particularly alarming example is the Internet Research Agency (IRA), a Russian organization known for its deployment of a network of social media bots and troll accounts across platforms such as Twitter and Facebook during the 2016 U.S. Presidential Election. These bots were meticulously programmed to post, like, and share content laden with political charge, amplify specific political messages, and disseminate misinformation. The primary objective of these activities was to foster division, sway public opinion, and potentially influence the outcome of the election.

This case illustrates how the use of non-human actors, such as bots, can significantly amplify manipulation efforts.[24] Another concerning development in this domain is the advent of Deepfake Technology. The manipulated video of Nancy Pelosi, Speaker of the U.S. House of Representatives, is a stark example of its potential applications.[25] Today, there are numerous tools available for creating deepfakes, including open-source software like DeepFaceLab and commercial platforms such as DeepArt.[26] These technologies, capable of generating convincingly altered videos, present a significant threat in terms of spreading false information. The Cambridge Analytica scandal further demonstrates the potential for misuse of information collection and accessibility.[27] This incident revealed how personal data could be exploited to build detailed psychological profiles for targeted political advertising.

Such examples paint a vivid picture of the ecosystem within which these tools can be developed. Given the relative ease of access to many of these technologies, it is apparent that even less organized and less funded groups might implement such technologies. Therefore, it becomes imperative to adopt an approach that recognizes the combination of publicly available software, whether powered by or coordinated with AI, as a potential new frontier in “cyber guerrilla” warfare. In this scenario, indirect AI pollution would likely be the most logical technique. Such a strategy, characterized by practical limitations, could nonetheless be subtly effective. These bots and similar technologies must be viewed as equalizers in the landscape of cyberwarfare. In summary, the current technological landscape presents a scenario where AI and related tools not only have the potential to manipulate public perception but also to democratize the means of conducting cyberwarfare. This new reality necessitates a vigilant and informed approach to understanding and mitigating the risks associated with these technologies.[28]

Countermeasures and Frameworks for Mitigating Risks

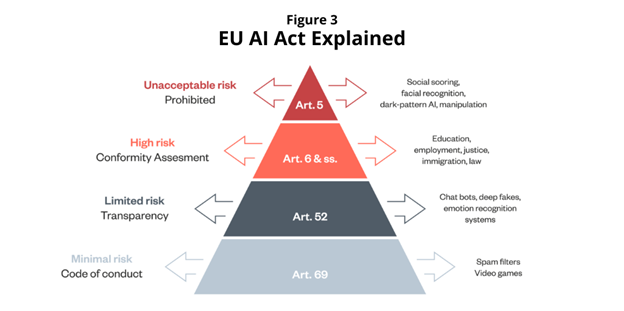

Advances in AI have sparked increasing calls for regulatory oversight. One notable example of these emerging regulations is the proposed EU AI Act, a landmark legislative effort poised to shape the future of AI governance in Europe.[29] This act is pivotal in defining the trajectory of AI development and its integration into society. To comprehend the nuances of the EU AI Act, it is crucial to delve into its operational mechanisms and underlying principles. The EU AI Act primarily aims to categorize and manage AI applications based on their perceived risk. This involves defining High-Risk Applications to foster a more tailored approach to AI safety. The Act’s risk-based framework segregates AI systems into four categories: prohibited, high-risk, limited-risk, and minimal-risk. Each category is subject to varying degrees of regulatory scrutiny and compliance requirements.[30] This structured approach reflects a conscientious effort to harmonize AI advancement with public safety and ethical standards.

A second pivotal aspect of the Act focuses on regulating the development of AI software. This aims to thwart potentially harmful internal development processes and safeguard the rights of European citizens. By setting clear development standards, the Act seeks to ensure that AI systems are designed with integrity and respect for fundamental human rights.[31] Thirdly, the Act incorporates provisions to protect intellectual property, including copyright. This includes establishing a framework for accountability, ensuring that creators and innovators retain their rights and receive due recognition for their contributions.[32] This aspect of the Act underscores the importance of balancing innovation with ethical and legal responsibilities.[33] An essential observation about the EU AI Act is its foundation in a human-centric perspective. This is evident in the criteria used for classifying high-risk applications, such as the impact on fundamental rights, transparency, human oversight, and data sensitivity.[34] While these factors are vital components of any AI regulatory framework, the Act’s approach might be seen as somewhat limited. It does not fully address the potential vulnerabilities that could arise from impeding the development of robust AI systems capable of withstanding adversarial attacks.

This oversight could inadvertently open a window for manipulation, potentially posing a greater threat than the internal risks the legislation seeks to mitigate. The regulations set forth in the Act could potentially decelerate AI development, as adherence to these standards might necessitate additional resources and time.[35] However, it is crucial to recognize that these regulations are intended to prevent the creation of AI systems that could pose significant risks to human health, safety, or fundamental rights. Therefore, a delicate balance must be struck between mitigating external vulnerabilities and ensuring internal safety. In conclusion, while the EU AI Act is a significant step forward in the realm of AI legislation, it necessitates further refinement. The Act should encompass specific regulations for the development of AI Safety, ensuring a more holistic approach that aligns with its overarching objectives. By doing so, the Act can more effectively navigate the complex interplay between technological innovation, public safety, and ethical governance.

Source: Lilian Edwards / Ada Lovelace Institute[36]

In the discourse surrounding AI regulation, it is imperative to adopt a multifaceted approach that encompasses both external threats, as proposed in this paper, and the delicate balance between AI development and citizens’ rights, which is the primary focus of the EU AI Act.[37] Achieving harmony between these two dimensions is a crucial step in the evolution of AI safety strategies. Such strategies must holistically assess risks, considering the rapidly expanding scope of AI applications and their increasingly intricate connections within our societies. This discussion extends beyond merely introducing regulatory measures for a new technology. Rather, it recognizes AI as a novel and dynamic entity that exists in symbiosis with our reality and complex systems. To address this, we propose an approach that is defined as “conduit.” This concept moves beyond restrictive measures, advocating for a methodology that both guides and safeguards AI development. It allows for AI to not only integrate with but also evolve within our complex systems. This adaptive strategy is essential for keeping pace with and effectively responding to the ever-changing landscape of external threats. The conduit approach recognizes AI as an integral part of our socio-technological ecosystem. It underscores the need for regulatory frameworks that are not only preventative but also proactive and flexible, enabling AI to flourish responsibly and ethically while protecting the rights and safety of individuals. This perspective is crucial for the sustainable and secure integration of AI into our daily lives and societal structures.

Conclusion

In conclusion, the rapid advancement of AI and its expanding role in modern society are undeniable.[38] We have embarked on an exploration of AI’s complex nature, uncovering the risks it poses in the realm of information warfare.[39] Central to this new type of warfare is the innovative concept of “AI pollution,” a term introduced to describe the vulnerability of AI systems to deliberately misleading data. This risk is not merely hypothetical; it presents a concrete threat in our current digital era, where AI technologies such as Bing Chat and ChatGPT are increasingly intertwined with the Internet and vast pools of open data.[40]

The accessible nature of AI also means that these current vulnerabilities in AI systems could be exploited by a wide group of malevolent forces, ranging from terrorist networks to antagonistic state actors. Such exploitation could potentially disrupt societal structures and skew public perception, a risk that is amplified considering AI’s increasing integration across multiple sectors, including government and private enterprises. Regulatory frameworks like the EU AI Act represent positive steps towards safer management of AI.[41] Yet, they still lack a holistic approach to covering the full spectrum of risks associated with AI, especially against sophisticated adversarial AI tactics.

This shortcoming underscores the need for a more comprehensive and adaptable regulatory approach that does not slow down the development of AI safety and thus weaken the safeguards it tries to impose. The “conduit” approach proposed in this paper advocates for a regulatory method that not only channels and controls AI development but also facilitates its harmonious integration and evolution in the face of external threats, while maintaining balance with our complex socio-technological landscapes and human rights. Ultimately, the future of warfare will inevitably involve AI. We are witnessing the debut of a new technology that will revolutionize our world well beyond what seemed possible just 10 years ago.[42] For this reason, it is imperative that we develop strategies that not only focus on the technical aspect but also take a step back to look at the whole picture, in order to truly understand the magnitude of what we have in front of us.

[1] “AI Dossier: Government & Public Services,” Deloitte, https://www2.deloitte.com/us/en/pages/consulting/articles/ai-dossier-government-public-services.html.

[2] Hohenstein, Jill; Kizilcec, René F.; DiFranzo, Dominic. “Artificial intelligence in communication impacts language and social relationships.” Scientific Reports, Nature, 2023. https://www.nature.com/articles/s41598-023-28293-4.

[3] “Ukraine uses drones equipped with AI to destroy military targets,” EuroMaidan Press, October 6, 2023, https://euromaidanpress.com/2023/10/06/ukraine-uses-drones-equipped-with-ai-to-destroy-military-targets/.

[4] University of Maryland’s Applied Research Laboratory for Intelligence and Security (ARLIS), “Cognitive Security,” ARLIS, 2023. https://www.arlis.umd.edu/our-mission/cognitive-security.

[5] “EU AI Act Regulation,” The New York Times, December 8, 2023, https://www.nytimes.com/2023/12/08/technology/eu-ai-act-regulation.html.

[6] “Artificial Intelligence in Business: Trends and Predictions.” Business News Daily, 2023. https://www.businessnewsdaily.com/9402-artificial-intelligence-business-trends.html.

[7] BriA’nna Lawson, “Enhancing Everyday Life: How AI is Revolutionizing Your Daily Experience,” Morgan State University, November 21, 2023, www.morgan.edu.

[8] “How Bing Chat Enterprise works with your data using GPT-4,” Microsoft Tech Community, https://techcommunity.microsoft.com/t5/microsoft-mechanics-blog/how-bing-chat-enterprise-works-with-your-data-using-gpt-4/ba-p/3930547.

[9] “Gradient Descent,” IBM, https://www.ibm.com/topics/gradient-descent.

[10] “Lectures on Imbalance, Outliers, and Shift,” Data-Centric AI, CSAIL, MIT, https://dcai.csail.mit.edu/lectures/imbalance-outliers-shift/.

[11] “Chemical, Biological, Radiological, and Nuclear Consequence Management,” Environmental Protection Agency (EPA), https://www.epa.gov/emergency-response/chemical-biological-radiological-and-nuclear-consequence-management.

[12] “Special Report 119,” United States Institute of Peace, https://www.usip.org/sites/default/files/sr119.pdf.

[13] “AI Needs Data More Than Data Needs AI,” Forbes Tech Council, Forbes, October 5, 2023, https://www.forbes.com/sites/forbestechcouncil/2023/10/05/ai-needs-data-more-than-data-needs-ai/.

[14] “Artificial Intelligence in Governance: A Comprehensive Analysis of AI Integration and Policy,” Medium, https://oliverbodemer.medium.com/artificial-intelligence-in-governance-a-comprehensive-analysis-of-ai-integration-and-policy-8fc1a4a342c5.

[15] IEEE Spectrum, “In 2016, Microsoft’s Racist Chatbot Revealed the Dangers of Online Conversation,” IEEE Spectrum, https://spectrum.ieee.org/in-2016-microsofts-racist-chatbot-revealed-the-dangers-of-online-conversation.

[16] “Police Violence Prediction: NDAS,” Wired UK, https://www.wired.co.uk/article/police-violence-prediction-ndas.

[17] “10 Real-world Examples of AI in Healthcare,” Philips, November 24, 2022, https://www.philips.com.au/a-w/about/news/archive/standard/news/articles/2022/20221124-10-real-world-examples-of-ai-in-healthcare.html.

[18] “AI Hype Has Corporate America Talking Big About Its Tech,” The Washington Post, August 24, 2023, https://www.washingtonpost.com/technology/2023/08/24/ai-corporate-hype/.

[19] SuneraTech Blog, “AI & ML and How They Are Applied to Facial Recognition Technology,” Accessed October 22, 2021, https://www.suneratech.com/blog/ai-ml-and-how-they-are-applied-to-facial-recognition-technology/.

[20] “AI Investment Forecast to Approach $200 Billion Globally by 2025,” Goldman Sachs, August 1, 2023, https://www.goldmansachs.com/intelligence/pages/ai-investment-forecast-to-approach-200-billion-globally-by-2025.html.

[21] “What Bing’s Chatbot Can Tell Us About AI Risk (and What It Can’t),” 80,000 Hours, March 5, 2023, https://80000hours.org/2023/03/what-bings-chatbot-can-tell-us-about-ai-risk-and-what-it-cant/.

[22] “AI Chatbots: A Comprehensive Review of Architectures and Dialogue Management,” Springer. Accessed December 8, 2023, https://link.springer.com/article/10.1007/s10462-023-10466-8.

[23] Council of Europe, “A Study of the Implications of Advanced Digital Technologies, Including Artificial Intelligence (AI) and Internet of Things (IoT).” Accessed December 8, 2023, https://rm.coe.int/a-study-of-the-implications-of-advanced-digital-technologies-including/168096bdab.

[24] BuzzFeed News, “A Detailed Look at How the Russian Troll Farm Worked in 2016.” Accessed December 8, 2023, https://www.buzzfeednews.com/article/ryanhatesthis/mueller-report-internet-research-agency-detailed-2016.

[25] “Artificial Intelligence Creeps into Daily Life,” Reuters. Accessed December 8, 2023, https://www.reuters.com/article/idUSL1N36V2E0.

[26] FormatSwap Blog, “How to Create a Deepfake Video Using DeepFaceLab.” Accessed December 8, 2023, https://formatswap.com/blog/machine-learning-tutorials/how-to-create-a-deepfake-video-using-deepfacelab/.

[27] “Cambridge Analytica Scandal Fallout,” The New York Times. Accessed December 8, 2023, https://www.nytimes.com/2018/04/04/us/politics/cambridge-analytica-scandal-fallout.html.

[28] Security Magazine, “The Role of Bots in API Attacks.” Accessed December 8, 2023, https://www.securitymagazine.com/articles/98546-the-role-of-bots-in-api-attacks.

[29] European Parliament, “EU AI Act: First Regulation on Artificial Intelligence.” Accessed December 9, 2023, https://www.europarl.europa.eu/news/en/headlines/society/20230601STO93804/eu-ai-act-first-regulation-on-artificial-intelligence.

[30] Center for Security and Emerging Technology (CSET) at Georgetown University, “The EU AI Act: A Primer.” Accessed December 9, 2023, https://cset.georgetown.edu/article/the-eu-ai-act-a-primer/.

[31] World Economic Forum, “European Union AI Act Explained.” Accessed December 9, 2023, https://www.weforum.org/agenda/2023/06/european-union-ai-act-explained/.

[32] Morrison & Foerster, “EU AI Act: The World’s First Comprehensive AI Regulation.” Accessed December 9, 2023, https://www.mofo.com/resources/insights/230811-eu-ai-act-the-world-s-first-comprehensive-ai-regulation.

[33] Council of the European Union, “Artificial Intelligence Act: Council calls for promoting safe AI that respects fundamental rights,” December 6, 2022, https://www.consilium.europa.eu/en/press/press-releases/2022/12/06/artificial-intelligence-act-council-calls-for-promoting-safe-ai-that-respects-fundamental-rights/.

[34] European Parliament, “Texts Adopted – Thursday, 4 May 2023 – Artificial Intelligence Act.” Accessed December 9, 2023, https://www.europarl.europa.eu/doceo/document/TA-9-2023-0236_EN.pdf.

[35] “EU’s new AI Act risks hampering innovation, warns Emmanuel Macron,” Financial Times. Accessed December 9, 2023, https://www.ft.com/content/9339d104-7b0c-42b8-9316-72226dd4e4c0.

[36] Ada Lovelace Institute, https://www.adalovelaceinstitute.org/resource/eu-ai-act-explainer/.

[37] European Parliament, “Artificial Intelligence Act: Deal on Comprehensive Rules for Trustworthy AI.” Accessed December 9, 2023, https://www.europarl.europa.eu/news/en/press-room/20231206IPR15699/artificial-intelligence-act-deal-on-comprehensive-rules-for-trustworthy-ai.

[38] Brookings Institution, “How Artificial Intelligence is Transforming the World.” Accessed December 9, 2023, https://www.brookings.edu/articles/how-artificial-intelligence-is-transforming-the-world/.

[39] RAND Corporation, “Monograph Report on Artificial Intelligence.” Accessed December 9, 2023, https://www.rand.org/pubs/monograph_reports/MR661.html.

[40] The Verge, “OpenAI ChatGPT Live Web Results Browse with Bing.” Accessed December 9, 2023, https://www.theverge.com/2023/9/27/23892781/openai-chatgpt-live-web-results-browse-with-bing.

[41] Atlantic Council, “Experts React: The EU Made a Deal on AI Rules, But Can Regulators Move at the Speed of Tech?” Accessed December 10, 2023, https://www.atlanticcouncil.org/blogs/new-atlanticist/experts-react/experts-react-the-eu-made-a-deal-on-ai-rules-but-can-regulators-move-at-the-speed-of-tech/.

[42] “Artificial Intelligence: The Decade of Transformation,” CNN. Accessed December 10, 2023, https://edition.cnn.com/2019/12/21/tech/artificial-intelligence-decade/index.html.