Imagine a world where decisions that significantly impact your life are made without any explanation. A judge issues a verdict, a loan application is denied, or you are flagged for security, all under the enigmatic influence of an invisible authority. This unsettling scenario mirrors the current state of Artificial Intelligence (AI), where complex algorithms function as inscrutable oracles. Their decision-making processes are shrouded within a “black box” and lack any form of human oversight. This is why we must strive for transparency and accountability in AI, so that these digital arbiters of our lives operate not as inscrutable entities but as understandable and controllable tools.

AI is projected to contribute an astounding US$15.7 trillion to the global GDP by 2030.[1] However, trust in this transformative technology is wavering. Prominent studies, such as the Edelman survey, reveal a 26-point gap between trust in the tech industry (76%) and trust in AI (50%). Over the past five years, global trust in AI has declined from 61% to 53%. Left unaddressed, these “black box” issues could carry a staggering economic cost and undermine the vast benefits that AI promises.[2]

This article underscores the critical need for “Explainable Artificial Intelligence (XAI)” and highlights the potential for the Gulf region to pioneer ethical AI governance. By addressing the opacity of AI decision-making processes, XAI can help bridge the trust gap, ensuring that the immense potential of AI can be fully realized in a manner that is ethical, transparent, and aligned with the region’s values.

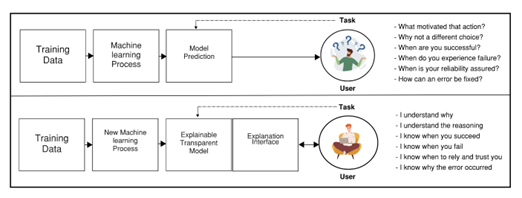

Figure 1: The “Black Box” of AI

Source: The Author

What is the “Black Box” issue and why is this worrying?

The elusive nature of AI decision-making relates to the lack of transparency and difficulty in understanding how AI systems make decisions, commonly known as the “black box problem”.[3] In this context, the term “black box” refers to complex algorithms whose decision-making process is obscured. We can see the inputs and outputs, but the internal workings remain unknown, posing a transparency challenge in AI.

Deep learning is one of the most prevalent forms of AI, operating similarly to how humans learn by example. Just as we teach children by showing them the correct methods, deep learning algorithms are trained using labeled data. Moreover, neural networks within deep learning systems learn to recognize patterns and features from these examples. Over time, they develop decision protocols for categorizing new experiences. But unlike humans, deep learning systems do not provide insight into how they arrive at their conclusions, hence the term “black box”.
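To make the idea of learning from labeled examples concrete, the following is a minimal sketch in Python of a single artificial neuron (a perceptron) trained on four labeled examples. It is an illustration only, not a deep learning system: the data, the function names `predict` and `train`, and the training settings are all assumptions chosen for brevity. The point is that the trained model returns an answer but no reason; what it has learned is stored as a handful of numbers, and a deep network stores millions of them.

```python
# Illustrative sketch: one artificial neuron learning from labeled examples.
# The data set and all names here are hypothetical, chosen for brevity.

# Labeled training data: inputs and the "correct answer" for each (logical AND).
examples = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]


def predict(weights, bias, inputs):
    """Return 1 if the weighted sum of the inputs is positive, else 0."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def train(data, max_epochs=100):
    """Perceptron rule: nudge the weights whenever a prediction is wrong."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for inputs, label in data:
            error = label - predict(weights, bias, inputs)
            if error != 0:
                mistakes += 1
                weights = [w + error * x for w, x in zip(weights, inputs)]
                bias += error
        if mistakes == 0:  # every example is classified correctly
            break
    return weights, bias


weights, bias = train(examples)
predictions = [predict(weights, bias, inputs) for inputs, _ in examples]

print("predictions:", predictions)  # the outputs we can observe
print("learned parameters:", weights, bias)  # numbers, not reasons
```

With only two weights the numbers can still be read by eye. The opacity described above arises when the same mechanism is scaled to many layers and millions of parameters.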

Unraveling the intricacies of AI algorithms poses a significant challenge, particularly when they make mistakes, given their lack of transparency in the decision-making process. This opacity can hinder our trust in these AI systems, as the rationale behind their decisions remains elusive. This lack of explainability can lead to serious consequences, which are becoming increasingly well-documented.

- Generative Adversarial Networks (GANs) are AI models that can fabricate highly realistic and manipulative audio-visual content known as deepfakes. Such convincing deepfakes raise concerns about disinformation campaigns, diminishing trust in media, and threats to national security. Because GANs often operate as “black boxes”, it becomes imperative to interpret their content generation process and identify any biases present within the training data.

- Facial recognition systems are becoming increasingly prevalent and are used for security, surveillance, and even social media applications. These systems can be inaccurate, particularly for people from certain ethnic demographics. The black box nature of many facial recognition systems makes it difficult to understand how they arrive at their decisions and to identify potential biases within the algorithms. In 2018, a test of a commercial facial recognition system against a database of mugshots falsely matched 28 members of the U.S. Congress with people who had been arrested.[4]

- Autonomous vehicles also illustrate the “black box” problem. In 2019, a car operating in Autopilot mode was involved in a fatal crash: its AI system failed to identify a tractor-trailer crossing the highway, continued driving, and passed under the trailer. The system made a decision that led to a fatal outcome, but it is not clear why it failed to recognize the trailer as an obstacle.[5]

- Predictive policing involves using algorithms to analyze large amounts of information to predict and prevent potential crimes. In 2019, it was found that some of these predictive policing models were biased against certain groups of people, leading to the wrongful arrest of several innocent people. The AI Now Institute reports that some U.S. police departments drew on biased data sources to inform their predictive policing systems.[6] Here too the “black box” problem is evident: the AI system made decisions that led to wrongful arrests, but it is not clear why it flagged certain individuals as being at risk of committing crimes.

What is XAI and why do we need it?

Explainable Artificial Intelligence (XAI) is a subfield of AI that focuses on making AI systems more interpretable and understandable to humans. This is important for several reasons. Firstly, the interpretability of XAI is instrumental in debugging AI systems. By comprehending the rationale behind an AI system’s decision, we can pinpoint and rectify errors with greater ease. Secondly, the transparency provided by XAI fosters trust in AI systems. When we grasp the workings of an AI system, we are more inclined to entrust it with decision-making responsibilities. Lastly, the interpretability of XAI enhances our communication with AI systems. A clear understanding of an AI system’s operations allows us to convey our requirements and expectations more effectively. Thus, XAI serves as a crucial bridge between human users and AI systems, facilitating error correction, trust-building, and effective communication.

Figure 2: Responsible AI Workflow

Source: The Author

What are others doing in dealing with the Black Box issue?

In a move towards greater transparency, the European Union’s (EU) new AI Act, adopted in 2024, attempts to tackle the opacity of “black box” AI systems by demanding “explainability” for high-risk applications. This will reshape how AI is developed and deployed not only across the EU but potentially across the Middle East and beyond, just as the General Data Protection Regulation (GDPR) did.

The EU’s AI Act represents a landmark development in regulating AI development and use. By mandating explainability for high-risk AI, the Act aims to ensure these systems are fair, unbiased, and accountable.[7] As the EU is a major economic and technological player, its regulations will likely influence international standards and establish a global benchmark for responsible AI development, much as the GDPR did for data protection.

The Gulf region, looking to attract international investment and partnerships in AI, may find itself under pressure to adopt similar transparency measures. Indeed, if the EU demonstrates that explainable AI can be successfully implemented, it could encourage the Gulf region to prioritize similar approaches. This could be particularly important for sectors like healthcare, finance, and security, where public trust in AI will be crucial for adoption.

Overall, the EU’s AI Act is a significant development with the potential to shape the global landscape of AI governance. While the immediate impact might be most felt within the EU, the focus on explainability could serve as a model for other regions, including the Gulf. The success of the EU’s approach will depend on its ability to foster trust and encourage international cooperation in developing responsible AI frameworks.

Can the pace of XAI keep up with the pace of change in AI?

The breakneck speed of AI development, with constantly evolving models and a steady stream of new algorithms and architectures, presents a moving target for researchers trying to explain black-box systems with new XAI techniques. New AI models might require entirely new approaches to explainability, straining the resources of the XAI research community.

While there are promising advancements in XAI research and growing demand for explainability, these have not yet translated into widespread adoption of robust XAI techniques across the entire spectrum of AI models. Standardization efforts are underway but have not yet yielded universally accepted frameworks.[8] Current methods like LIME and SHAP, while powerful, have difficulty explaining complex deep learning models, which are a cornerstone of modern AI.[9] Elon Musk’s new company, “xAI”, has the potential to advance explainable AI research, but it is early days and specifics about its approach are limited. However, AI safety expert Dan Hendrycks, who leads the ‘Center for AI Safety’, could steer “xAI” research towards more transparent and accountable AI systems.
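SHAP builds on Shapley values from cooperative game theory, which divide a model’s output among its input features.[9] The sketch below is a brute-force illustration of that underlying idea in Python. It is not the SHAP library itself and not a method endorsed by the sources cited here. The scoring function `black_box_score`, its three features, and the applicant and baseline figures are hypothetical. Because the calculation enumerates every subset of features, its cost roughly doubles with each added feature, which hints at why explaining large models remains difficult.

```python
# Illustrative sketch: exact Shapley values for a tiny, hypothetical model.
# Nothing here is the API of the SHAP library; all names are assumptions.
from itertools import combinations
from math import factorial


def black_box_score(income, debt, years_employed):
    """Hypothetical opaque loan-scoring model, used only for illustration."""
    score = 0.5 * income - 0.8 * debt + 2.0 * years_employed
    if income > 50 and debt < 20:  # a hidden interaction between two features
        score += 10.0
    return score


def shapley_values(model, instance, baseline):
    """Attribute model(instance) - model(baseline) to each feature.

    A feature "absent" from a subset takes its baseline value.
    """
    n = len(instance)
    attributions = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            for subset in combinations(others, size):
                # Standard Shapley weight: |S|! (n - |S| - 1)! / n!
                weight = factorial(size) * factorial(n - size - 1) / factorial(n)
                with_i = [instance[j] if (j in subset or j == i) else baseline[j]
                          for j in range(n)]
                without_i = [instance[j] if j in subset else baseline[j]
                             for j in range(n)]
                phi += weight * (model(*with_i) - model(*without_i))
        attributions.append(phi)
    return attributions


applicant = [60.0, 10.0, 3.0]  # hypothetical income, debt, years employed
baseline = [40.0, 30.0, 1.0]   # hypothetical reference applicant
attributions = shapley_values(black_box_score, applicant, baseline)

for name, value in zip(["income", "debt", "years_employed"], attributions):
    print(f"{name}: {value:+.2f}")
```

For this hypothetical applicant, the attributions add up to the difference between the applicant’s score and the baseline score, a property that makes such explanations straightforward to audit.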

In sum, the evidence suggests that, for now, Explainable AI is struggling to keep pace with the rapid advancements in AI development. The inherent complexity of modern AI models, the ever-evolving nature of the field, and the continued prioritization of performance over explainability all pose significant challenges. However, the growing demand for transparency and increased research efforts offer a glimmer of hope. Continued investment in XAI research, industry collaboration, and a shift in focus towards Explainable AI development alongside performance metrics are crucial for narrowing the gap.[10]

What are the socio-economic arguments for Explainable AI?

Explainable AI offers a range of socioeconomic benefits that can significantly impact the development of the Gulf region.

- Transparency and Trust: Opaque AI systems can raise concerns about bias, fairness, and accountability in decision-making processes, which can lead to a decline in public trust in AI and hinder its adoption across crucial sectors. XAI, by contrast, can increase trust in and acceptance of AI, leading to significant economic gains. It fosters trust by allowing users to understand how AI reaches conclusions, making them more comfortable with its integration into areas such as public services. A 2021 report by the World Economic Forum highlights the potential of AI to improve healthcare delivery, education, and social services.[11] Public trust in the fairness and explainability of these AI-powered services is crucial for their successful implementation.

- Economic Growth and Job Creation: Concerns about job displacement due to automation may create resistance to AI adoption, but XAI could create new job opportunities, as listed below. A 2020 study by the Brookings Institution estimates that the net impact of AI on jobs will be positive, creating more jobs than it displaces.[12] However, this requires a smooth transition for displaced workers, supported by retraining programs. XAI can play a role in this by enabling a better understanding of how AI automates tasks and facilitating the development of reskilling programs.

  - XAI Development: The demand for XAI specialists who can design, develop, and implement explainable AI models is expected to rise significantly.

  - AI Auditing: As AI systems become more complex, the need for experts to audit and explain their decision-making processes will grow.

  - Explainable AI Communication: The ability to effectively communicate complex AI outputs to stakeholders will be a valuable skill in various sectors.

- Innovation and Productivity Gains: XAI can empower developers to understand how AI models arrive at decisions, allowing for:

  - Improved Model Performance: Identifying biases and inefficiencies within AI models can help developers improve their accuracy and effectiveness.

  - Faster Development Cycles: XAI can streamline the development process by providing insights into how models function, leading to faster iterations and improvements. A 2017 McKinsey report suggests that AI can potentially increase global productivity by up to 1.5% annually by 2030.[13] XAI can contribute to this growth by helping to ensure that AI models are functioning at their peak capacity.

Towards a regional XAI framework

The complexity of AI models often makes them appear as a “black box”, limiting trust in their reliability. This is where XAI comes into play. An actionable regional XAI framework is greatly needed, and it should address the following:

- Ethical Regulation of AI: A specialized AI ethics committee should be established within the AI Regulatory Body. This committee would focus on developing ethical guidelines and standards for AI, while the Regulatory Body would oversee compliance with these guidelines.

- Creating an XAI Innovation Ecosystem: The framework encourages fostering a regional culture of innovation and collaboration among academia, industry, and government across the Gulf region. This ecosystem could accelerate the development of unique and effective XAI solutions.

- Developing XAI Talent: Investment in education and training programs could help build a skilled workforce capable of developing and implementing effective XAI solutions.

- Promoting Research and Development in XAI: The GCC has shown a commitment to AI research and development already. This commitment could be extended to include a specific focus on XAI, encouraging collaboration between academia, industry, and government in this area.

- Enhancing XAI Education and Awareness: Structured, focused XAI programs should promote understanding and awareness of XAI among policymakers, businesses, and the public.

Conclusion

Without the implementation of XAI, the future could be fraught with challenges. Some experts warn that a lack of human scrutiny could inevitably lead to failures in usability, reliability, safety, and fairness, laying the basis for a moral crisis in AI. Without a deep understanding of why XAI is a key influence on how future generations accept AI, businesses could fall victim to heightened scrutiny in the coming years. This lack of understanding presents numerous problems with serious consequences, including potentially catastrophic errors when flawed models (or decisions based on them) are deployed in real-world contexts. Therefore, the development and implementation of an XAI framework are not just beneficial but crucial for the ethical, fair, and effective use of AI technologies in the future. It is a key step towards building a society where AI and humans can work together in harmony, with mutual trust and understanding.

[1] PwC, “Sizing the Prize: PwC Global Artificial Intelligence Study,” 2024, https://www.pwc.com/gx/en/issues/data-and-analytics/publications/artificial-intelligence-study.html.

[2] Edelman, R., “Technology Industry Watch Out, Innovation at Risk,” Edelman, March 5, 2024, https://www.edelman.com/insights/technology-industry-watch-out-innovation-risk.

[3] Coolidge, M., “The Danger of AI’s Black Box,” Casper Labs, March 22, 2024, https://casperlabs.io/blog/the-danger-of-ais-black-box.

[4] Snow, J., “Amazon’s Face Recognition Falsely Matched 28 Members of Congress With Mugshots,” ACLU, 2018, https://www.aclu.org/news/privacy-technology/amazons-face-recognition-falsely-matched-28.

[5] Thadani, T. et al., “The final 11 seconds of a fatal Tesla Autopilot crash,” The Washington Post, October 6, 2023, https://www.washingtonpost.com/technology/interactive/2023/tesla-autopilot-crash-analysis/.

[6] Lau, T., “Predictive Policing Explained,” Brennan Center for Justice, April 1, 2020, https://www.brennancenter.org/our-work/research-reports/predictive-policing-explained.

[7] Explainable AI: the basics, (The Royal Society: London, 2019), https://ec.europa.eu/futurium/en/system/files/ged/ai-and-interpretability-policy-briefing_creative_commons.pdf.

[8] “Explainable Artificial Intelligence (XAI),” DARPA, 2024, https://www.darpa.mil/program/explainable-artificial-intelligence.

[9] Lundberg, S. & Lee, S.-i., “A Unified Approach to Interpreting Model Predictions,” Artificial Intelligence, May 22, 2017, https://www.arxiv.org/abs/1705.07874.

[10] “Statement on AI Risk,” Center for AI Safety, 2024, https://www.safe.ai/work/statement-on-ai-risk.

[11] World Economic Forum, “The Global Risks Report: Insight Report,” January 19, 2021, https://www.weforum.org/publications/the-global-risks-report-2021/.

[12] Bell, S. A. & Korinek, A., “AI’s economic peril to democracy,” Journal of Democracy, Brookings, March 14, 2024, pp. 1-15, https://www.brookings.edu/articles/ais-economic-peril-to-democracy/.

[13] McKinsey&Company, “Jobs Lost, Jobs Gained: Workforce Transitions in a Time of Automation,” McKinsey Global Institute, 2017, https://www.mckinsey.com/~/media/mckinsey/industries/public%20and%20social%20sector/our%20insights/what%20the%20future%20of%20work%20will%20mean%20for%20jobs%20skills%20and%20wages/mgi-jobs-lost-jobs-gained-executive-summary-december-6-2017.pdf